Spark Tutorial: Learning Apache Spark

Adapted from GitHub

During this tutorial we will cover:

Part 1: Basic notebook usage and Python integration

Part 3: Using RDDs and chaining together transformations and actions

Part 4: Lambda functions

Part 5: Additional RDD actions

Part 6: Additional RDD transformations

Part 7: Caching RDDs and storage options

The following transformations will be covered:

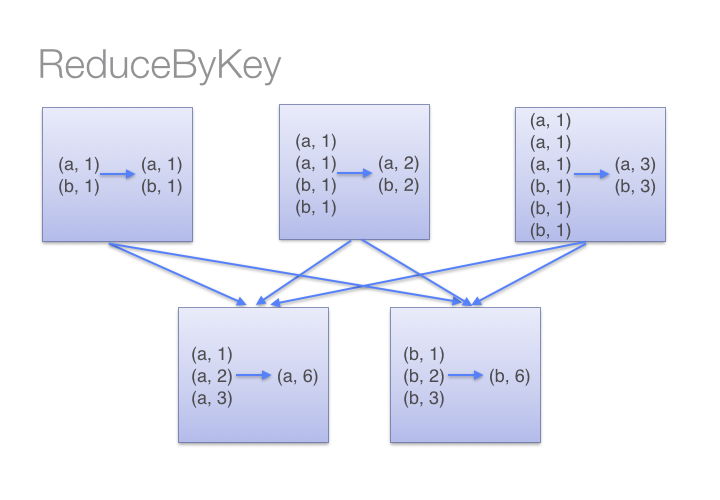

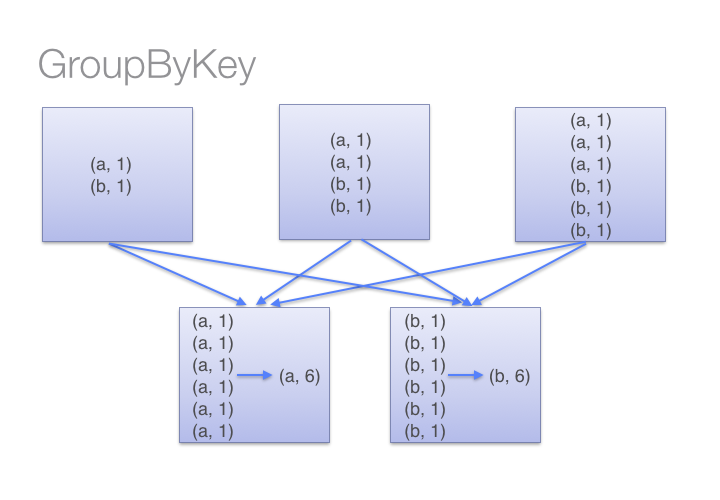

map(), mapPartitions(), mapPartitionsWithIndex(), filter(), flatMap(), reduceByKey(), groupByKey()

The following actions will be covered:

first(), take(), takeSample(), takeOrdered(), collect(), count(), countByValue(), reduce(), top()

Also covered:

cache(), unpersist(), id(), setName()

Note that, for reference, you can look up the details of these methods in the Spark Python API documentation.

Part 0: Google Colaboratory environment setup

# Install Java (headless OpenJDK 8), which Spark runs on

!apt-get install openjdk-8-jdk-headless -qq > /dev/null

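# Next, download Apache Spark 3.5.6 prebuilt for Hadoop 3 from the Apache CDN
# (dlcdn only serves current releases; older ones are kept at https://archive.apache.org/dist/spark/)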

!wget https://dlcdn.apache.org/spark/spark-3.5.6/spark-3.5.6-bin-hadoop3.tgz

# Extract the downloaded .tgz archive

!tar xf spark-3.5.6-bin-hadoop3.tgz

# Setting JVM and Spark path variables

import os

os.environ["JAVA_HOME"] = "/usr/lib/jvm/java-8-openjdk-amd64"

os.environ["SPARK_HOME"] = "/content/spark-3.5.6-bin-hadoop3"

# Installing required packages

!pip install pyspark==3.5.6

!pip install findspark

import findspark

findspark.init()

--2025-09-13 19:13:18-- https://dlcdn.apache.org/spark/spark-3.5.6/spark-3.5.6-bin-hadoop3.tgz

Resolving dlcdn.apache.org (dlcdn.apache.org)... 151.101.2.132, 2a04:4e42::644

Connecting to dlcdn.apache.org (dlcdn.apache.org)|151.101.2.132|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 400923510 (382M) [application/x-gzip]

Saving to: ‘spark-3.5.6-bin-hadoop3.tgz’

spark-3.5.6-bin-had 100%[===================>] 382.35M 302MB/s in 1.3s

2025-09-13 19:13:35 (302 MB/s) - ‘spark-3.5.6-bin-hadoop3.tgz’ saved [400923510/400923510]

Collecting pyspark==3.5.6

Downloading pyspark-3.5.6.tar.gz (317.4 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 317.4/317.4 MB 4.3 MB/s eta 0:00:00

Preparing metadata (setup.py) ... done

Requirement already satisfied: py4j==0.10.9.7 in /usr/local/lib/python3.12/dist-packages (from pyspark==3.5.6) (0.10.9.7)

Building wheels for collected packages: pyspark

Building wheel for pyspark (setup.py) ... done

Created wheel for pyspark: filename=pyspark-3.5.6-py2.py3-none-any.whl size=317895798 sha256=3223be3a1f0f0f064c32140c9d086f7bdfa796afc20b50dcdb8174976e239a73

Stored in directory: /root/.cache/pip/wheels/64/62/f3/ec15656ea4ada0523cae62a1827fe7beb55d3c8c87174aad4a

Successfully built pyspark

Installing collected packages: pyspark

Attempting uninstall: pyspark

Found existing installation: pyspark 3.5.1

Uninstalling pyspark-3.5.1:

Successfully uninstalled pyspark-3.5.1

Successfully installed pyspark-3.5.6

Collecting findspark

Downloading findspark-2.0.1-py2.py3-none-any.whl.metadata (352 bytes)

Downloading findspark-2.0.1-py2.py3-none-any.whl (4.4 kB)

Installing collected packages: findspark

Successfully installed findspark-2.0.1

# Create a SparkContext (sc)

from pyspark import SparkContext

sc = SparkContext("local", "example")

Part 1: Basic notebook usage and Python integration

(1a) Notebook usage

A notebook is made up of a linear sequence of cells. These cells can contain either markdown or code, but we won't mix both in one cell. When a markdown cell is executed, it renders formatted text, images, and links, just like HTML in a normal web page. The text you are reading right now is part of a markdown cell. Python code cells allow you to execute arbitrary Python commands, just like in any Python interpreter. Place your cursor inside the cell below and press "Shift" + "Enter" to execute the code and advance to the next cell. You can also press "Ctrl" + "Enter" to execute the code and remain in the cell. These commands work the same way in both markdown and code cells.

# This is a Python cell. You can run normal Python code here...

print('The sum of 1 and 1 is {0}'.format(1+1))

The sum of 1 and 1 is 2

# Here is another Python cell, this time with a variable (x) declaration and an if statement:

x = 42

if x > 40:
    print('The sum of 1 and 2 is {0}'.format(1+2))

The sum of 1 and 2 is 3

(1b) Notebook state

As you work through a notebook, it is important that you run all of the code cells. The notebook is stateful, which means that variables and their values are retained until the notebook is detached (in Databricks Cloud) or the kernel is restarted (in IPython notebooks). If you do not run all of the code cells as you proceed through the notebook, your variables will not be properly initialized and later code might fail. You will also need to rerun any cells that you have modified so that the changes are available to other cells.

# This cell relies on x being already defined.

# If we had not run the cells from part (1a), this code would fail.

print(x * 2)

84

(1c) Library imports

We can import standard Python libraries (modules) the usual way. An import statement imports the specified module. In this tutorial and in future labs, we will provide any imports that are necessary.

# Import the regular expression library

import re

m = re.search('(?<=abc)def', 'abcdef')

m.group(0)

'def'

# Import the datetime library

import datetime

print('This was last run on: {0}'.format(datetime.datetime.now()))

This was last run on: 2025-09-06 15:59:22.703444

Part 3: Using RDDs and chaining together transformations and actions

Working with your first RDD

In Spark, we first create a base Resilient Distributed Dataset (RDD). We can then apply one or more transformations to that base RDD. An RDD is immutable, so once it is created, it cannot be changed. As a result, each transformation creates a new RDD. Finally, we can apply one or more actions to the RDDs. Note that Spark uses lazy evaluation, so transformations are not actually executed until an action occurs.

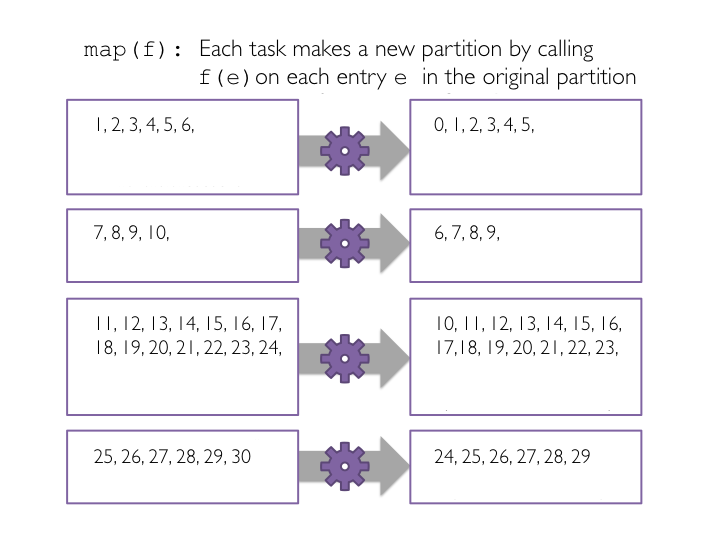

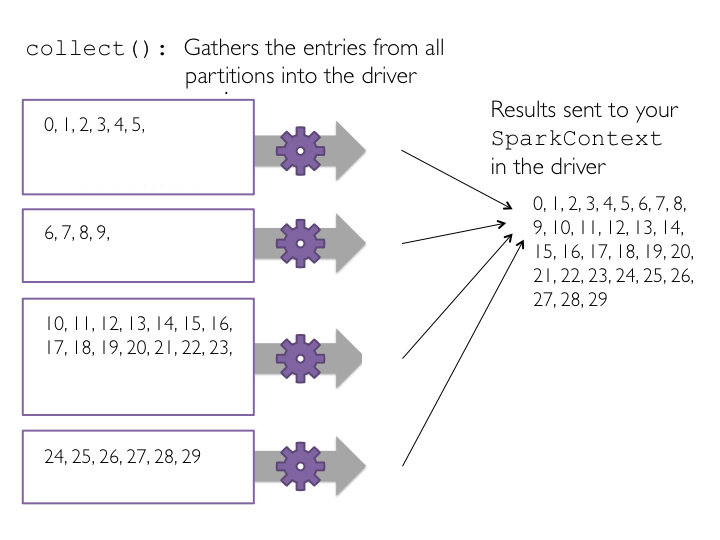

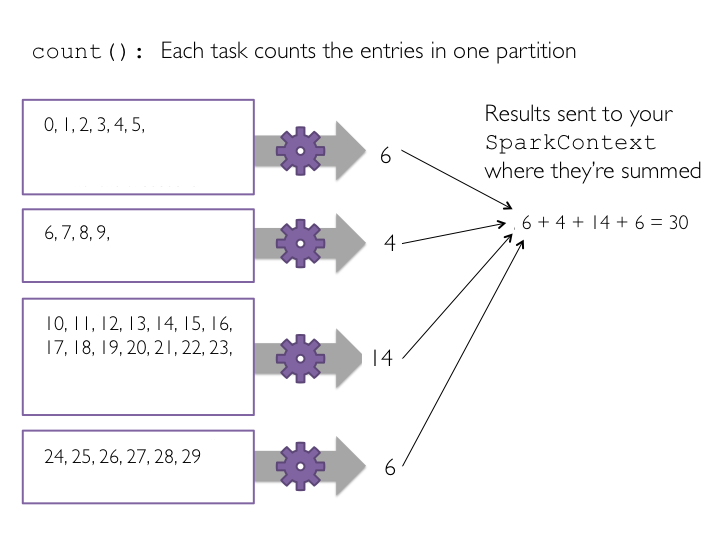

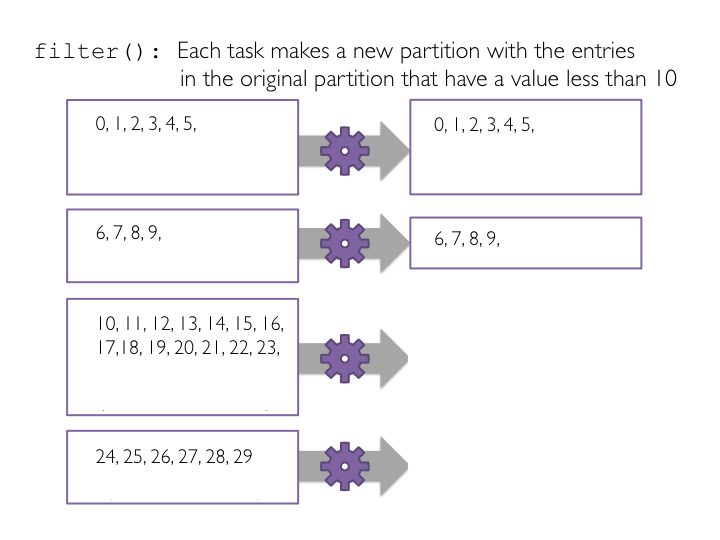

We will work through several exercises to get a better understanding of RDDs:

Create a Python collection of 10,000 integers

Create a Spark base RDD from that collection

Subtract one from each value using map

Perform the action collect to view the results

Perform the action count to view the counts

Apply the transformation filter and view the results with collect

Learn about lambda functions

Explore how lazy evaluation works and the debugging challenges it introduces

(3a) Create a Python collection of integers from 1 to 10000

We will use the range() function to create the collection of integers. In Python 3, range() returns a lazy range object that produces values only as they are needed, instead of building the full list in memory. Python 2 had a separate xrange() function for this purpose, because its range() built the whole list. xrange() no longer exists in Python 3. As a result, range() is memory-efficient even for large ranges.
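One quick way to see that a Python 3 range is lazy is to compare its memory footprint with that of the equivalent list. The exact byte counts depend on the Python build, so this sketch only compares sizes and does not print specific numbers.

```python
import sys

lazy = range(1, 10001)          # stores only start, stop and step
eager = list(range(1, 10001))   # materializes all 10,000 integers

# The range object is much smaller than the list...
print(sys.getsizeof(lazy), sys.getsizeof(eager))

# ...and its size does not grow with the length of the range.
print(sys.getsizeof(range(1, 10**9)) == sys.getsizeof(lazy))  # True

# It still supports len() and indexing without building a list.
print(len(lazy), lazy[0], lazy[-1])  # 10000 1 10000
```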

data = range(1, 10001)

print(data)

range(1, 10001)

# data is a Python range object, which supports indexing and len() like a list

# Get the last element of data (index 9999)

data[9999]

10000

# We can check the size of the collection using the len() function

len(data)

10000

(3b) Distributed data and using a collection to create an RDD

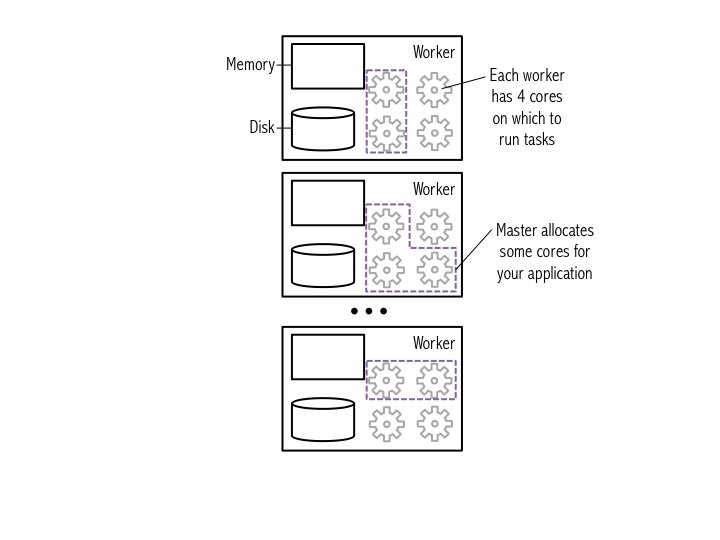

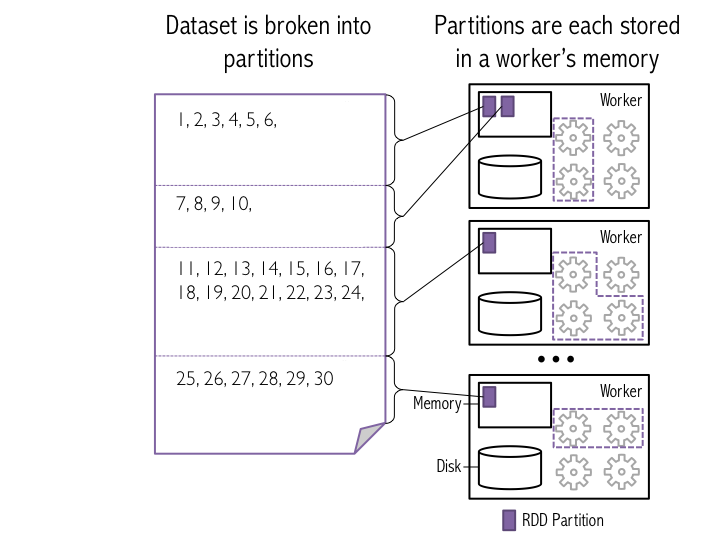

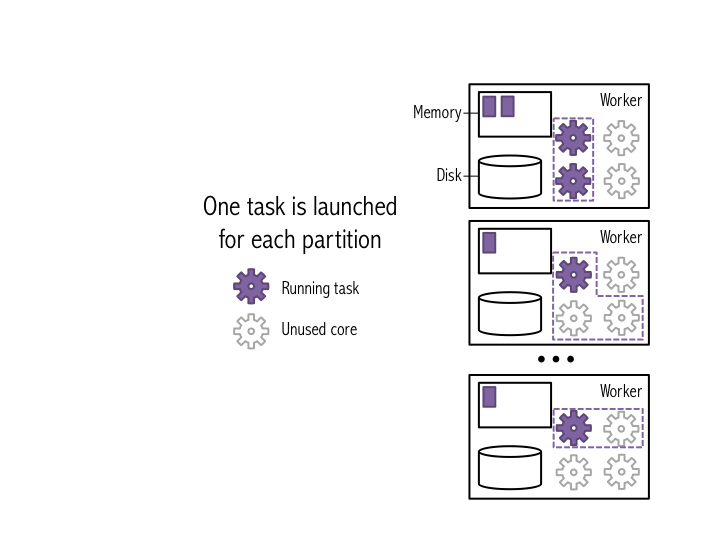

In Spark, datasets are represented as a list of entries, where the list is broken up into many different partitions that are stored on different machines. Each partition holds a unique subset of the entries in the list. Spark calls the datasets it stores "Resilient Distributed Datasets" (RDDs).

One of the defining features of Spark, compared to other data analytics frameworks (for example, Hadoop MapReduce), is that it can keep data in memory between operations instead of writing intermediate results to disk. This lets many Spark applications run much faster, because they are not slowed down by repeatedly reading data from disk.

The figures in this section illustrate how Spark breaks a list of data entries into partitions that are each stored in memory on a worker.

To create the RDD, we use sc.parallelize(). This method tells Spark to create a new set of input data based on the data that is passed in. In this example, we will provide a range. The second argument to sc.parallelize() tells Spark how many partitions to break the data into when it stores the data in memory (we will talk more about this later in this tutorial). For better performance, parallelize recommends passing a range() when the input represents a range, as its own help text below states. In Python 3, range() plays the role of Python 2's xrange(). This is why we used range() in 3a.

There are many different types of RDDs. The base class for RDDs is pyspark.RDD, and the other RDD types subclass it. Because they inherit from pyspark.RDD, they share the same API and are used in the same way. We will see that sc.parallelize() returns a pyspark.rdd.PipelinedRDD when its input is a range. In Python 3 this is true for both RDDs created below, since xrange() no longer exists. A plain list input yields a base pyspark.rdd.RDD instead.

Once an RDD has been cached, we can view it in the "Storage" tab of the Spark web UI. You will notice that a new dataset is not listed until Spark needs to return a result because an action has been executed. This feature of Spark is called "lazy evaluation", and it allows Spark to avoid performing unnecessary calculations.

# Parallelize the data using 8 partitions

# This operation is a transformation of data into an RDD

# Spark uses lazy evaluation, so no Spark jobs are run at this point

xrangeRDD = sc.parallelize(data, 8)

# Let's view the help for parallelize

help(sc.parallelize)

Help on method parallelize in module pyspark.context:

parallelize(c: Iterable[~T], numSlices: Optional[int] = None) -> pyspark.rdd.RDD[~T] method of pyspark.context.SparkContext instance

Distribute a local Python collection to form an RDD. Using range

is recommended if the input represents a range for performance.

.. versionadded:: 0.7.0

Parameters

----------

c : :class:`collections.abc.Iterable`

iterable collection to distribute

numSlices : int, optional

the number of partitions of the new RDD

Returns

-------

:class:`RDD`

RDD representing distributed collection.

Examples

--------

>>> sc.parallelize([0, 2, 3, 4, 6], 5).glom().collect()

[[0], [2], [3], [4], [6]]

>>> sc.parallelize(range(0, 6, 2), 5).glom().collect()

[[], [0], [], [2], [4]]

Deal with a list of strings.

>>> strings = ["a", "b", "c"]

>>> sc.parallelize(strings, 2).glom().collect()

[['a'], ['b', 'c']]

# Let's see what type sc.parallelize() returned

print('type of xrangeRDD: {0}'.format(type(xrangeRDD)))

# What if we use a range?

dataRange = range(1, 10001)

rangeRDD = sc.parallelize(dataRange, 8)

print('type of dataRangeRDD: {0}'.format(type(rangeRDD)))

type of xrangeRDD: <class 'pyspark.rdd.PipelinedRDD'>

type of dataRangeRDD: <class 'pyspark.rdd.PipelinedRDD'>

# Each RDD gets a unique ID

print('xrangeRDD id: {0}'.format(xrangeRDD.id()))

print('rangeRDD id: {0}'.format(rangeRDD.id()))

xrangeRDD id: 2

rangeRDD id: 3

# We can name each newly created RDD using the setName() method

xrangeRDD.setName('My first RDD')

My first RDD PythonRDD[2] at RDD at PythonRDD.scala:53

# Let's view the lineage (the set of transformations) of the RDD using toDebugString()

print(xrangeRDD.toDebugString())

b'(8) My first RDD PythonRDD[2] at RDD at PythonRDD.scala:53 []\n | ParallelCollectionRDD[0] at readRDDFromFile at PythonRDD.scala:289 []'

# Let's use help to see what methods we can call on this RDD

help(xrangeRDD)

Help on PipelinedRDD in module pyspark.rdd object:

class PipelinedRDD(RDD, typing.Generic)

| PipelinedRDD(prev: pyspark.rdd.RDD[~T], func: Callable[[int, Iterable[~T]], Iterable[~U]], preservesPartitioning: bool = False, isFromBarrier: bool = False)

|

| Examples

| --------

| Pipelined maps:

|

| >>> rdd = sc.parallelize([1, 2, 3, 4])

| >>> rdd.map(lambda x: 2 * x).cache().map(lambda x: 2 * x).collect()

| [4, 8, 12, 16]

| >>> rdd.map(lambda x: 2 * x).map(lambda x: 2 * x).collect()

| [4, 8, 12, 16]

|

| Pipelined reduces:

|

| >>> from operator import add

| >>> rdd.map(lambda x: 2 * x).reduce(add)

| 20

| >>> rdd.flatMap(lambda x: [x, x]).reduce(add)

| 20

|

| Method resolution order:

| PipelinedRDD

| RDD

| typing.Generic

| builtins.object

|

| Methods defined here:

|

| __init__(self, prev: pyspark.rdd.RDD[~T], func: Callable[[int, Iterable[~T]], Iterable[~U]], preservesPartitioning: bool = False, isFromBarrier: bool = False)

| Initialize self. See help(type(self)) for accurate signature.

|

| getNumPartitions(self) -> int

| Returns the number of partitions in RDD

|

| .. versionadded:: 1.1.0

|

| Returns

| -------

| int

| number of partitions

|

| Examples

| --------

| >>> rdd = sc.parallelize([1, 2, 3, 4], 2)

| >>> rdd.getNumPartitions()

| 2

|

| id(self) -> int

| A unique ID for this RDD (within its SparkContext).

|

| .. versionadded:: 0.7.0

|

| Returns

| -------

| int

| The unique ID for this :class:`RDD`

|

| Examples

| --------

| >>> rdd = sc.range(5)

| >>> rdd.id() # doctest: +SKIP

| 3

|

| ----------------------------------------------------------------------

| Data and other attributes defined here:

|

| __annotations__ = {}

|

| __orig_bases__ = (pyspark.rdd.RDD[~U], typing.Generic[~T, ~U])

|

| __parameters__ = (~T, ~U)

|

| ----------------------------------------------------------------------

| Methods inherited from RDD:

|

| __add__(self: 'RDD[T]', other: 'RDD[U]') -> 'RDD[Union[T, U]]'

| Return the union of this RDD and another one.

|

| Examples

| --------

| >>> rdd = sc.parallelize([1, 1, 2, 3])

| >>> (rdd + rdd).collect()

| [1, 1, 2, 3, 1, 1, 2, 3]

|

| __getnewargs__(self) -> NoReturn

|

| __repr__(self) -> str

| Return repr(self).

|

| aggregate(self: 'RDD[T]', zeroValue: ~U, seqOp: Callable[[~U, ~T], ~U], combOp: Callable[[~U, ~U], ~U]) -> ~U

| Aggregate the elements of each partition, and then the results for all

| the partitions, using a given combine functions and a neutral "zero

| value."

|

| The functions ``op(t1, t2)`` is allowed to modify ``t1`` and return it

| as its result value to avoid object allocation; however, it should not

| modify ``t2``.

|

| The first function (seqOp) can return a different result type, U, than

| the type of this RDD. Thus, we need one operation for merging a T into

| an U and one operation for merging two U

|

| .. versionadded:: 1.1.0

|

| Parameters

| ----------

| zeroValue : U

| the initial value for the accumulated result of each partition

| seqOp : function

| a function used to accumulate results within a partition

| combOp : function

| an associative function used to combine results from different partitions

|

| Returns

| -------

| U

| the aggregated result

|

| See Also

| --------

| :meth:`RDD.reduce`

| :meth:`RDD.fold`

|

| Examples

| --------

| >>> seqOp = (lambda x, y: (x[0] + y, x[1] + 1))

| >>> combOp = (lambda x, y: (x[0] + y[0], x[1] + y[1]))

| >>> sc.parallelize([1, 2, 3, 4]).aggregate((0, 0), seqOp, combOp)

| (10, 4)

| >>> sc.parallelize([]).aggregate((0, 0), seqOp, combOp)

| (0, 0)

|

| aggregateByKey(self: 'RDD[Tuple[K, V]]', zeroValue: ~U, seqFunc: Callable[[~U, ~V], ~U], combFunc: Callable[[~U, ~U], ~U], numPartitions: Optional[int] = None, partitionFunc: Callable[[~K], int] = <function portable_hash at 0x7d0ad45511c0>) -> 'RDD[Tuple[K, U]]'

| Aggregate the values of each key, using given combine functions and a neutral

| "zero value". This function can return a different result type, U, than the type

| of the values in this RDD, V. Thus, we need one operation for merging a V into

| a U and one operation for merging two U's, The former operation is used for merging

| values within a partition, and the latter is used for merging values between

| partitions. To avoid memory allocation, both of these functions are

| allowed to modify and return their first argument instead of creating a new U.

|

| .. versionadded:: 1.1.0

|

| Parameters

| ----------

| zeroValue : U

| the initial value for the accumulated result of each partition

| seqFunc : function

| a function to merge a V into a U

| combFunc : function

| a function to combine two U's into a single one

| numPartitions : int, optional

| the number of partitions in new :class:`RDD`

| partitionFunc : function, optional, default `portable_hash`

| function to compute the partition index

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` containing the keys and the aggregated result for each key

|

| See Also

| --------

| :meth:`RDD.reduceByKey`

| :meth:`RDD.combineByKey`

| :meth:`RDD.foldByKey`

| :meth:`RDD.groupByKey`

|

| Examples

| --------

| >>> rdd = sc.parallelize([("a", 1), ("b", 1), ("a", 2)])

| >>> seqFunc = (lambda x, y: (x[0] + y, x[1] + 1))

| >>> combFunc = (lambda x, y: (x[0] + y[0], x[1] + y[1]))

| >>> sorted(rdd.aggregateByKey((0, 0), seqFunc, combFunc).collect())

| [('a', (3, 2)), ('b', (1, 1))]

|

| barrier(self: 'RDD[T]') -> 'RDDBarrier[T]'

| Marks the current stage as a barrier stage, where Spark must launch all tasks together.

| In case of a task failure, instead of only restarting the failed task, Spark will abort the

| entire stage and relaunch all tasks for this stage.

| The barrier execution mode feature is experimental and it only handles limited scenarios.

| Please read the linked SPIP and design docs to understand the limitations and future plans.

|

| .. versionadded:: 2.4.0

|

| Returns

| -------

| :class:`RDDBarrier`

| instance that provides actions within a barrier stage.

|

| See Also

| --------

| :class:`pyspark.BarrierTaskContext`

|

| Notes

| -----

| For additional information see

|

| - `SPIP: Barrier Execution Mode <https://issues.apache.org/jira/browse/SPARK-24374>`_

| - `Design Doc <https://issues.apache.org/jira/browse/SPARK-24582>`_

|

| This API is experimental

|

| cache(self: 'RDD[T]') -> 'RDD[T]'

| Persist this RDD with the default storage level (`MEMORY_ONLY`).

|

| .. versionadded:: 0.7.0

|

| Returns

| -------

| :class:`RDD`

| The same :class:`RDD` with storage level set to `MEMORY_ONLY`

|

| See Also

| --------

| :meth:`RDD.persist`

| :meth:`RDD.unpersist`

| :meth:`RDD.getStorageLevel`

|

| Examples

| --------

| >>> rdd = sc.range(5)

| >>> rdd2 = rdd.cache()

| >>> rdd2 is rdd

| True

| >>> str(rdd.getStorageLevel())

| 'Memory Serialized 1x Replicated'

| >>> _ = rdd.unpersist()

|

| cartesian(self: 'RDD[T]', other: 'RDD[U]') -> 'RDD[Tuple[T, U]]'

| Return the Cartesian product of this RDD and another one, that is, the

| RDD of all pairs of elements ``(a, b)`` where ``a`` is in `self` and

| ``b`` is in `other`.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| other : :class:`RDD`

| another :class:`RDD`

|

| Returns

| -------

| :class:`RDD`

| the Cartesian product of this :class:`RDD` and another one

|

| See Also

| --------

| :meth:`pyspark.sql.DataFrame.crossJoin`

|

| Examples

| --------

| >>> rdd = sc.parallelize([1, 2])

| >>> sorted(rdd.cartesian(rdd).collect())

| [(1, 1), (1, 2), (2, 1), (2, 2)]

|

| checkpoint(self) -> None

| Mark this RDD for checkpointing. It will be saved to a file inside the

| checkpoint directory set with :meth:`SparkContext.setCheckpointDir` and

| all references to its parent RDDs will be removed. This function must

| be called before any job has been executed on this RDD. It is strongly

| recommended that this RDD is persisted in memory, otherwise saving it

| on a file will require recomputation.

|

| .. versionadded:: 0.7.0

|

| See Also

| --------

| :meth:`RDD.isCheckpointed`

| :meth:`RDD.getCheckpointFile`

| :meth:`RDD.localCheckpoint`

| :meth:`SparkContext.setCheckpointDir`

| :meth:`SparkContext.getCheckpointDir`

|

| Examples

| --------

| >>> rdd = sc.range(5)

| >>> rdd.is_checkpointed

| False

| >>> rdd.getCheckpointFile() == None

| True

|

| >>> rdd.checkpoint()

| >>> rdd.is_checkpointed

| True

| >>> rdd.getCheckpointFile() == None

| True

|

| >>> rdd.count()

| 5

| >>> rdd.is_checkpointed

| True

| >>> rdd.getCheckpointFile() == None

| False

|

| cleanShuffleDependencies(self, blocking: bool = False) -> None

| Removes an RDD's shuffles and it's non-persisted ancestors.

|

| When running without a shuffle service, cleaning up shuffle files enables downscaling.

| If you use the RDD after this call, you should checkpoint and materialize it first.

|

| .. versionadded:: 3.3.0

|

| Parameters

| ----------

| blocking : bool, optional, default False

| whether to block on shuffle cleanup tasks

|

| Notes

| -----

| This API is a developer API.

|

| coalesce(self: 'RDD[T]', numPartitions: int, shuffle: bool = False) -> 'RDD[T]'

| Return a new RDD that is reduced into `numPartitions` partitions.

|

| .. versionadded:: 1.0.0

|

| Parameters

| ----------

| numPartitions : int, optional

| the number of partitions in new :class:`RDD`

| shuffle : bool, optional, default False

| whether to add a shuffle step

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` that is reduced into `numPartitions` partitions

|

| See Also

| --------

| :meth:`RDD.repartition`

|

| Examples

| --------

| >>> sc.parallelize([1, 2, 3, 4, 5], 3).glom().collect()

| [[1], [2, 3], [4, 5]]

| >>> sc.parallelize([1, 2, 3, 4, 5], 3).coalesce(1).glom().collect()

| [[1, 2, 3, 4, 5]]

|

| cogroup(self: 'RDD[Tuple[K, V]]', other: 'RDD[Tuple[K, U]]', numPartitions: Optional[int] = None) -> 'RDD[Tuple[K, Tuple[ResultIterable[V], ResultIterable[U]]]]'

| For each key k in `self` or `other`, return a resulting RDD that

| contains a tuple with the list of values for that key in `self` as

| well as `other`.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| other : :class:`RDD`

| another :class:`RDD`

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` containing the keys and cogrouped values

|

| See Also

| --------

| :meth:`RDD.groupWith`

| :meth:`RDD.join`

|

| Examples

| --------

| >>> rdd1 = sc.parallelize([("a", 1), ("b", 4)])

| >>> rdd2 = sc.parallelize([("a", 2)])

| >>> [(x, tuple(map(list, y))) for x, y in sorted(list(rdd1.cogroup(rdd2).collect()))]

| [('a', ([1], [2])), ('b', ([4], []))]

|

| collect(self: 'RDD[T]') -> List[~T]

| Return a list that contains all the elements in this RDD.

|

| .. versionadded:: 0.7.0

|

| Returns

| -------

| list

| a list containing all the elements

|

| Notes

| -----

| This method should only be used if the resulting array is expected

| to be small, as all the data is loaded into the driver's memory.

|

| See Also

| --------

| :meth:`RDD.toLocalIterator`

| :meth:`pyspark.sql.DataFrame.collect`

|

| Examples

| --------

| >>> sc.range(5).collect()

| [0, 1, 2, 3, 4]

| >>> sc.parallelize(["x", "y", "z"]).collect()

| ['x', 'y', 'z']

|

| collectAsMap(self: 'RDD[Tuple[K, V]]') -> Dict[~K, ~V]

| Return the key-value pairs in this RDD to the master as a dictionary.

|

| .. versionadded:: 0.7.0

|

| Returns

| -------

| :class:`dict`

| a dictionary of (key, value) pairs

|

| See Also

| --------

| :meth:`RDD.countByValue`

|

| Notes

| -----

| This method should only be used if the resulting data is expected

| to be small, as all the data is loaded into the driver's memory.

|

| Examples

| --------

| >>> m = sc.parallelize([(1, 2), (3, 4)]).collectAsMap()

| >>> m[1]

| 2

| >>> m[3]

| 4

|

| collectWithJobGroup(self: 'RDD[T]', groupId: str, description: str, interruptOnCancel: bool = False) -> 'List[T]'

| When collect rdd, use this method to specify job group.

|

| .. versionadded:: 3.0.0

|

| .. deprecated:: 3.1.0

| Use :class:`pyspark.InheritableThread` with the pinned thread mode enabled.

|

| Parameters

| ----------

| groupId : str

| The group ID to assign.

| description : str

| The description to set for the job group.

| interruptOnCancel : bool, optional, default False

| whether to interrupt jobs on job cancellation.

|

| Returns

| -------

| list

| a list containing all the elements

|

| See Also

| --------

| :meth:`RDD.collect`

| :meth:`SparkContext.setJobGroup`

|

| combineByKey(self: 'RDD[Tuple[K, V]]', createCombiner: Callable[[~V], ~U], mergeValue: Callable[[~U, ~V], ~U], mergeCombiners: Callable[[~U, ~U], ~U], numPartitions: Optional[int] = None, partitionFunc: Callable[[~K], int] = <function portable_hash at 0x7d0ad45511c0>) -> 'RDD[Tuple[K, U]]'

| Generic function to combine the elements for each key using a custom

| set of aggregation functions.

|

| Turns an RDD[(K, V)] into a result of type RDD[(K, C)], for a "combined

| type" C.

|

| To avoid memory allocation, both mergeValue and mergeCombiners are allowed to

| modify and return their first argument instead of creating a new C.

|

| In addition, users can control the partitioning of the output RDD.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| createCombiner : function

| a function to turns a V into a C

| mergeValue : function

| a function to merge a V into a C

| mergeCombiners : function

| a function to combine two C's into a single one

| numPartitions : int, optional

| the number of partitions in new :class:`RDD`

| partitionFunc : function, optional, default `portable_hash`

| function to compute the partition index

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` containing the keys and the aggregated result for each key

|

| See Also

| --------

| :meth:`RDD.reduceByKey`

| :meth:`RDD.aggregateByKey`

| :meth:`RDD.foldByKey`

| :meth:`RDD.groupByKey`

|

| Notes

| -----

| V and C can be different -- for example, one might group an RDD of type

| (Int, Int) into an RDD of type (Int, List[Int]).

|

| Examples

| --------

| >>> rdd = sc.parallelize([("a", 1), ("b", 1), ("a", 2)])

| >>> def to_list(a):

| ... return [a]

| ...

| >>> def append(a, b):

| ... a.append(b)

| ... return a

| ...

| >>> def extend(a, b):

| ... a.extend(b)

| ... return a

| ...

| >>> sorted(rdd.combineByKey(to_list, append, extend).collect())

| [('a', [1, 2]), ('b', [1])]

|

| count(self) -> int

| Return the number of elements in this RDD.

|

| .. versionadded:: 0.7.0

|

| Returns

| -------

| int

| the number of elements

|

| See Also

| --------

| :meth:`RDD.countApprox`

| :meth:`pyspark.sql.DataFrame.count`

|

| Examples

| --------

| >>> sc.parallelize([2, 3, 4]).count()

| 3

|

| countApprox(self, timeout: int, confidence: float = 0.95) -> int

| Approximate version of count() that returns a potentially incomplete

| result within a timeout, even if not all tasks have finished.

|

| .. versionadded:: 1.2.0

|

| Parameters

| ----------

| timeout : int

| maximum time to wait for the job, in milliseconds

| confidence : float

| the desired statistical confidence in the result

|

| Returns

| -------

| int

| a potentially incomplete result, with error bounds

|

| See Also

| --------

| :meth:`RDD.count`

|

| Examples

| --------

| >>> rdd = sc.parallelize(range(1000), 10)

| >>> rdd.countApprox(1000, 1.0)

| 1000

|

| countApproxDistinct(self: 'RDD[T]', relativeSD: float = 0.05) -> int

| Return approximate number of distinct elements in the RDD.

|

| .. versionadded:: 1.2.0

|

| Parameters

| ----------

| relativeSD : float, optional

| Relative accuracy. Smaller values create

| counters that require more space.

| It must be greater than 0.000017.

|

| Returns

| -------

| int

| approximate number of distinct elements

|

| See Also

| --------

| :meth:`RDD.distinct`

|

| Notes

| -----

| The algorithm used is based on streamlib's implementation of

| `"HyperLogLog in Practice: Algorithmic Engineering of a State

| of The Art Cardinality Estimation Algorithm", available here

| <https://doi.org/10.1145/2452376.2452456>`_.

|

| Examples

| --------

| >>> n = sc.parallelize(range(1000)).map(str).countApproxDistinct()

| >>> 900 < n < 1100

| True

| >>> n = sc.parallelize([i % 20 for i in range(1000)]).countApproxDistinct()

| >>> 16 < n < 24

| True

|

| countByKey(self: 'RDD[Tuple[K, V]]') -> Dict[~K, int]

| Count the number of elements for each key, and return the result to the

| master as a dictionary.

|

| .. versionadded:: 0.7.0

|

| Returns

| -------

| dict

| a dictionary of (key, count) pairs

|

| See Also

| --------

| :meth:`RDD.collectAsMap`

| :meth:`RDD.countByValue`

|

| Examples

| --------

| >>> rdd = sc.parallelize([("a", 1), ("b", 1), ("a", 1)])

| >>> sorted(rdd.countByKey().items())

| [('a', 2), ('b', 1)]

|

| countByValue(self: 'RDD[K]') -> Dict[~K, int]

| Return the count of each unique value in this RDD as a dictionary of

| (value, count) pairs.

|

| .. versionadded:: 0.7.0

|

| Returns

| -------

| dict

| a dictionary of (value, count) pairs

|

| See Also

| --------

| :meth:`RDD.collectAsMap`

| :meth:`RDD.countByKey`

|

| Examples

| --------

| >>> sorted(sc.parallelize([1, 2, 1, 2, 2], 2).countByValue().items())

| [(1, 2), (2, 3)]

|

| distinct(self: 'RDD[T]', numPartitions: Optional[int] = None) -> 'RDD[T]'

| Return a new RDD containing the distinct elements in this RDD.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| numPartitions : int, optional

| the number of partitions in new :class:`RDD`

|

| Returns

| -------

| :class:`RDD`

| a new :class:`RDD` containing the distinct elements

|

| See Also

| --------

| :meth:`RDD.countApproxDistinct`

|

| Examples

| --------

| >>> sorted(sc.parallelize([1, 1, 2, 3]).distinct().collect())

| [1, 2, 3]

|

| filter(self: 'RDD[T]', f: Callable[[~T], bool]) -> 'RDD[T]'

| Return a new RDD containing only the elements that satisfy a predicate.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| f : function

| a function to run on each element of the RDD

|

| Returns

| -------

| :class:`RDD`

| a new :class:`RDD` by applying a function to each element

|

| See Also

| --------

| :meth:`RDD.map`

|

| Examples

| --------

| >>> rdd = sc.parallelize([1, 2, 3, 4, 5])

| >>> rdd.filter(lambda x: x % 2 == 0).collect()

| [2, 4]

|

| first(self: 'RDD[T]') -> ~T

| Return the first element in this RDD.

|

| .. versionadded:: 0.7.0

|

| Returns

| -------

| T

| the first element

|

| See Also

| --------

| :meth:`RDD.take`

| :meth:`pyspark.sql.DataFrame.first`

| :meth:`pyspark.sql.DataFrame.head`

|

| Examples

| --------

| >>> sc.parallelize([2, 3, 4]).first()

| 2

| >>> sc.parallelize([]).first()

| Traceback (most recent call last):

| ...

| ValueError: RDD is empty

|

| flatMap(self: 'RDD[T]', f: Callable[[~T], Iterable[~U]], preservesPartitioning: bool = False) -> 'RDD[U]'

| Return a new RDD by first applying a function to all elements of this

| RDD, and then flattening the results.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| f : function

| a function to turn a T into a sequence of U

| preservesPartitioning : bool, optional, default False

| indicates whether the input function preserves the partitioner,

| which should be False unless this is a pair RDD and the input

|

| Returns

| -------

| :class:`RDD`

| a new :class:`RDD` by applying a function to all elements

|

| See Also

| --------

| :meth:`RDD.map`

| :meth:`RDD.mapPartitions`

| :meth:`RDD.mapPartitionsWithIndex`

| :meth:`RDD.mapPartitionsWithSplit`

|

| Examples

| --------

| >>> rdd = sc.parallelize([2, 3, 4])

| >>> sorted(rdd.flatMap(lambda x: range(1, x)).collect())

| [1, 1, 1, 2, 2, 3]

| >>> sorted(rdd.flatMap(lambda x: [(x, x), (x, x)]).collect())

| [(2, 2), (2, 2), (3, 3), (3, 3), (4, 4), (4, 4)]

|
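To make the difference between `map` and `flatMap` concrete, here is a pure-Python sketch on an ordinary list, with no Spark involved. The helper names `simulated_map` and `simulated_flat_map` are hypothetical. `flatMap` calls the function once per element and then concatenates the returned iterables, whereas `map` keeps each returned value as a single element.

```python
from itertools import chain
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def simulated_map(f: Callable[[T], U], data: Iterable[T]) -> List[U]:
    # One output element per input element, whatever f returns
    return [f(x) for x in data]


def simulated_flat_map(f: Callable[[T], Iterable[U]], data: Iterable[T]) -> List[U]:
    # f returns an iterable per element; those iterables are concatenated
    return list(chain.from_iterable(f(x) for x in data))


nested = simulated_map(lambda x: list(range(1, x)), [2, 3, 4])
flat = simulated_flat_map(lambda x: range(1, x), [2, 3, 4])
print(nested)  # [[1], [1, 2], [1, 2, 3]]
print(flat)    # [1, 1, 2, 1, 2, 3]
```

Sorting `flat` gives `[1, 1, 1, 2, 2, 3]`, the same list as the `flatMap` docstring example.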

| flatMapValues(self: 'RDD[Tuple[K, V]]', f: Callable[[~V], Iterable[~U]]) -> 'RDD[Tuple[K, U]]'

| Pass each value in the key-value pair RDD through a flatMap function

| without changing the keys; this also retains the original RDD's

| partitioning.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| f : function

| a function to turn a V into a sequence of U

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` containing the keys and the flat-mapped values

|

| See Also

| --------

| :meth:`RDD.flatMap`

| :meth:`RDD.mapValues`

|

| Examples

| --------

| >>> rdd = sc.parallelize([("a", ["x", "y", "z"]), ("b", ["p", "r"])])

| >>> def f(x): return x

| ...

| >>> rdd.flatMapValues(f).collect()

| [('a', 'x'), ('a', 'y'), ('a', 'z'), ('b', 'p'), ('b', 'r')]

|

| fold(self: 'RDD[T]', zeroValue: ~T, op: Callable[[~T, ~T], ~T]) -> ~T

| Aggregate the elements of each partition, and then the results for all

| the partitions, using a given associative function and a neutral "zero value."

|

| The function ``op(t1, t2)`` is allowed to modify ``t1`` and return it

| as its result value to avoid object allocation; however, it should not

| modify ``t2``.

|

| This behaves somewhat differently from fold operations implemented

| for non-distributed collections in functional languages like Scala.

| This fold operation may be applied to partitions individually, and then

| fold those results into the final result, rather than apply the fold

| to each element sequentially in some defined ordering. For functions

| that are not commutative, the result may differ from that of a fold

| applied to a non-distributed collection.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| zeroValue : T

| the initial value for the accumulated result of each partition

| op : function

| a function used to both accumulate results within a partition and combine

| results from different partitions

|

| Returns

| -------

| T

| the aggregated result

|

| See Also

| --------

| :meth:`RDD.reduce`

| :meth:`RDD.aggregate`

|

| Examples

| --------

| >>> from operator import add

| >>> sc.parallelize([1, 2, 3, 4, 5]).fold(0, add)

| 15

|
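The two-level behaviour of `fold` described above can be modelled in plain Python. This is a sketch that assumes partitions are represented as lists; the helper `simulated_fold` is hypothetical and not part of PySpark. It shows why `zeroValue` must be neutral: it is applied once per partition and once more when the partial results are combined.

```python
from functools import reduce
from operator import add
from typing import Callable, List, TypeVar

T = TypeVar("T")


def simulated_fold(partitions: List[List[T]], zero: T, op: Callable[[T, T], T]) -> T:
    # Fold each partition independently, each one starting from `zero`
    per_partition = [reduce(op, part, zero) for part in partitions]
    # Fold the per-partition results, again starting from `zero`
    return reduce(op, per_partition, zero)


parts = [[1, 2], [3, 4, 5]]
print(simulated_fold(parts, 0, add))  # 15: 0 is a true neutral element for addition
print(simulated_fold(parts, 1, add))  # 18: the 1 is added once per partition plus once more
```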

| foldByKey(self: 'RDD[Tuple[K, V]]', zeroValue: ~V, func: Callable[[~V, ~V], ~V], numPartitions: Optional[int] = None, partitionFunc: Callable[[~K], int] = portable_hash) -> 'RDD[Tuple[K, V]]'

| Merge the values for each key using an associative function "func"

| and a neutral "zeroValue" which may be added to the result an

| arbitrary number of times, and must not change the result

| (e.g., 0 for addition, or 1 for multiplication).

|

| .. versionadded:: 1.1.0

|

| Parameters

| ----------

| zeroValue : V

| the initial value for the accumulated result of each partition

| func : function

| a function to combine two V's into a single one

| numPartitions : int, optional

| the number of partitions in new :class:`RDD`

| partitionFunc : function, optional, default `portable_hash`

| function to compute the partition index

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` containing the keys and the aggregated result for each key

|

| See Also

| --------

| :meth:`RDD.reduceByKey`

| :meth:`RDD.combineByKey`

| :meth:`RDD.aggregateByKey`

| :meth:`RDD.groupByKey`

|

| Examples

| --------

| >>> rdd = sc.parallelize([("a", 1), ("b", 1), ("a", 1)])

| >>> from operator import add

| >>> sorted(rdd.foldByKey(0, add).collect())

| [('a', 2), ('b', 1)]

|
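The requirement that `zeroValue` "may be added to the result an arbitrary number of times" can be illustrated with a pure-Python sketch. It assumes, as a simplification, that `zeroValue` is folded in once per key per partition before the partial results are merged across partitions; the helper `simulated_fold_by_key` is hypothetical.

```python
from operator import add
from typing import Callable, Dict, Hashable, List, Tuple, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")


def simulated_fold_by_key(
    partitions: List[List[Tuple[K, V]]], zero: V, func: Callable[[V, V], V]
) -> Dict[K, V]:
    merged: Dict[K, V] = {}
    for part in partitions:
        # Map side: the first value of each key in a partition is folded into `zero`
        local: Dict[K, V] = {}
        for k, v in part:
            local[k] = func(local[k], v) if k in local else func(zero, v)
        # Reduce side: combine the per-partition results for each key
        for k, acc in local.items():
            merged[k] = func(merged[k], acc) if k in merged else acc
    return merged


parts = [[("a", 1), ("b", 1)], [("a", 1)]]
print(simulated_fold_by_key(parts, 0, add))   # {'a': 2, 'b': 1}
print(simulated_fold_by_key(parts, 10, add))  # {'a': 22, 'b': 11}
```

With a non-neutral zero of 10, key `"a"` picks it up twice because it appears in two partitions, which is exactly why the zero value must not change the result.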

| foreach(self: 'RDD[T]', f: Callable[[~T], NoneType]) -> None

| Applies a function to all elements of this RDD.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| f : function

| a function applied to each element

|

| See Also

| --------

| :meth:`RDD.foreachPartition`

| :meth:`pyspark.sql.DataFrame.foreach`

| :meth:`pyspark.sql.DataFrame.foreachPartition`

|

| Examples

| --------

| >>> def f(x): print(x)

| ...

| >>> sc.parallelize([1, 2, 3, 4, 5]).foreach(f)

|

| foreachPartition(self: 'RDD[T]', f: Callable[[Iterable[~T]], NoneType]) -> None

| Applies a function to each partition of this RDD.

|

| .. versionadded:: 1.0.0

|

| Parameters

| ----------

| f : function

| a function applied to each partition

|

| See Also

| --------

| :meth:`RDD.foreach`

| :meth:`pyspark.sql.DataFrame.foreach`

| :meth:`pyspark.sql.DataFrame.foreachPartition`

|

| Examples

| --------

| >>> def f(iterator):

| ... for x in iterator:

| ... print(x)

| ...

| >>> sc.parallelize([1, 2, 3, 4, 5]).foreachPartition(f)

|

| fullOuterJoin(self: 'RDD[Tuple[K, V]]', other: 'RDD[Tuple[K, U]]', numPartitions: Optional[int] = None) -> 'RDD[Tuple[K, Tuple[Optional[V], Optional[U]]]]'

| Perform a full outer join of `self` and `other`.

|

| For each element (k, v) in `self`, the resulting RDD will either

| contain all pairs (k, (v, w)) for w in `other`, or the pair

| (k, (v, None)) if no elements in `other` have key k.

|

| Similarly, for each element (k, w) in `other`, the resulting RDD will

| either contain all pairs (k, (v, w)) for v in `self`, or the pair

| (k, (None, w)) if no elements in `self` have key k.

|

| Hash-partitions the resulting RDD into the given number of partitions.

|

| .. versionadded:: 1.2.0

|

| Parameters

| ----------

| other : :class:`RDD`

| another :class:`RDD`

| numPartitions : int, optional

| the number of partitions in new :class:`RDD`

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` containing all pairs of elements with matching keys

|

| See Also

| --------

| :meth:`RDD.join`

| :meth:`RDD.leftOuterJoin`

| :meth:`RDD.rightOuterJoin`

| :meth:`pyspark.sql.DataFrame.join`

|

| Examples

| --------

| >>> rdd1 = sc.parallelize([("a", 1), ("b", 4)])

| >>> rdd2 = sc.parallelize([("a", 2), ("c", 8)])

| >>> sorted(rdd1.fullOuterJoin(rdd2).collect())

| [('a', (1, 2)), ('b', (4, None)), ('c', (None, 8))]

|
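The full outer join rules above (all matching pairs, plus `None` on whichever side lacks the key) can be checked with a single-machine sketch. It ignores partitioning and shuffling entirely; the helper `simulated_full_outer_join` is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Optional, Tuple, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")
W = TypeVar("W")


def simulated_full_outer_join(
    left: List[Tuple[K, V]], right: List[Tuple[K, W]]
) -> List[Tuple[K, Tuple[Optional[V], Optional[W]]]]:
    # Group the values of each side by key
    lmap: Dict[K, List[V]] = defaultdict(list)
    rmap: Dict[K, List[W]] = defaultdict(list)
    for k, v in left:
        lmap[k].append(v)
    for k, w in right:
        rmap[k].append(w)
    out = []
    for k in set(lmap) | set(rmap):
        # A side with no values for this key contributes a single None placeholder
        lvals = lmap.get(k) or [None]
        rvals = rmap.get(k) or [None]
        out.extend((k, (v, w)) for v in lvals for w in rvals)
    return out


result = sorted(simulated_full_outer_join([("a", 1), ("b", 4)], [("a", 2), ("c", 8)]))
print(result)  # [('a', (1, 2)), ('b', (4, None)), ('c', (None, 8))]
```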

| getCheckpointFile(self) -> Optional[str]

| Gets the name of the file to which this RDD was checkpointed.

|

| Not defined if the RDD is checkpointed locally.

|

| .. versionadded:: 0.7.0

|

| Returns

| -------

| str

| the name of the file to which this :class:`RDD` was checkpointed

|

| See Also

| --------

| :meth:`RDD.checkpoint`

| :meth:`SparkContext.setCheckpointDir`

| :meth:`SparkContext.getCheckpointDir`

|

| getResourceProfile(self) -> Optional[pyspark.resource.profile.ResourceProfile]

| Get the :class:`pyspark.resource.ResourceProfile` specified with this RDD or None

| if it wasn't specified.

|

| .. versionadded:: 3.1.0

|

| Returns

| -------

| :class:`pyspark.resource.ResourceProfile`

| The user-specified profile, or None if none was specified

|

| See Also

| --------

| :meth:`RDD.withResources`

|

| Notes

| -----

| This API is experimental.

|

| getStorageLevel(self) -> pyspark.storagelevel.StorageLevel

| Get the RDD's current storage level.

|

| .. versionadded:: 1.0.0

|

| Returns

| -------

| :class:`StorageLevel`

| current :class:`StorageLevel`

|

| See Also

| --------

| :meth:`RDD.name`

|

| Examples

| --------

| >>> rdd = sc.parallelize([1,2])

| >>> rdd.getStorageLevel()

| StorageLevel(False, False, False, False, 1)

| >>> print(rdd.getStorageLevel())

| Serialized 1x Replicated

|

| glom(self: 'RDD[T]') -> 'RDD[List[T]]'

| Return an RDD created by coalescing all elements within each partition

| into a list.

|

| .. versionadded:: 0.7.0

|

| Returns

| -------

| :class:`RDD`

| a new :class:`RDD` coalescing all elements within each partition into a list

|

| Examples

| --------

| >>> rdd = sc.parallelize([1, 2, 3, 4], 2)

| >>> sorted(rdd.glom().collect())

| [[1, 2], [3, 4]]

|

| groupBy(self: 'RDD[T]', f: Callable[[~T], ~K], numPartitions: Optional[int] = None, partitionFunc: Callable[[~K], int] = portable_hash) -> 'RDD[Tuple[K, Iterable[T]]]'

| Return an RDD of grouped items.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| f : function

| a function to compute the key

| numPartitions : int, optional

| the number of partitions in new :class:`RDD`

| partitionFunc : function, optional, default `portable_hash`

| a function to compute the partition index

|

| Returns

| -------

| :class:`RDD`

| a new :class:`RDD` of grouped items

|

| See Also

| --------

| :meth:`RDD.groupByKey`

| :meth:`pyspark.sql.DataFrame.groupBy`

|

| Examples

| --------

| >>> rdd = sc.parallelize([1, 1, 2, 3, 5, 8])

| >>> result = rdd.groupBy(lambda x: x % 2).collect()

| >>> sorted([(x, sorted(y)) for (x, y) in result])

| [(0, [2, 8]), (1, [1, 1, 3, 5])]

|
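Conceptually, `groupBy` keys every element with `f` and collects the elements that share a key. As we understand it, PySpark implements this as a `map` to `(f(x), x)` pairs followed by `groupByKey`, so every element is shuffled. The pure-Python sketch below (hypothetical helper `simulated_group_by`, no partitions) shows only the grouping semantics.

```python
from collections import defaultdict
from typing import Callable, Dict, Hashable, Iterable, List, TypeVar

T = TypeVar("T")
K = TypeVar("K", bound=Hashable)


def simulated_group_by(data: Iterable[T], key_fn: Callable[[T], K]) -> Dict[K, List[T]]:
    # Key every element with key_fn(x), then collect the elements sharing a key
    groups: Dict[K, List[T]] = defaultdict(list)
    for x in data:
        groups[key_fn(x)].append(x)
    return dict(groups)


print(simulated_group_by([1, 1, 2, 3, 5, 8], lambda x: x % 2))
# {1: [1, 1, 3, 5], 0: [2, 8]}
```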

| groupByKey(self: 'RDD[Tuple[K, V]]', numPartitions: Optional[int] = None, partitionFunc: Callable[[~K], int] = portable_hash) -> 'RDD[Tuple[K, Iterable[V]]]'

| Group the values for each key in the RDD into a single sequence.

| Hash-partitions the resulting RDD with numPartitions partitions.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| numPartitions : int, optional

| the number of partitions in new :class:`RDD`

| partitionFunc : function, optional, default `portable_hash`

| function to compute the partition index

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` containing the keys and the grouped result for each key

|

| See Also

| --------

| :meth:`RDD.reduceByKey`

| :meth:`RDD.combineByKey`

| :meth:`RDD.aggregateByKey`

| :meth:`RDD.foldByKey`

|

| Notes

| -----

| If you are grouping in order to perform an aggregation (such as a

| sum or average) over each key, using reduceByKey or aggregateByKey will

| provide much better performance.

|

| Examples

| --------

| >>> rdd = sc.parallelize([("a", 1), ("b", 1), ("a", 1)])

| >>> sorted(rdd.groupByKey().mapValues(len).collect())

| [('a', 2), ('b', 1)]

| >>> sorted(rdd.groupByKey().mapValues(list).collect())

| [('a', [1, 1]), ('b', [1])]

|

| groupWith(self: 'RDD[Tuple[Any, Any]]', other: 'RDD[Tuple[Any, Any]]', *others: 'RDD[Tuple[Any, Any]]') -> 'RDD[Tuple[Any, Tuple[ResultIterable[Any], ...]]]'

| Alias for cogroup but with support for multiple RDDs.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| other : :class:`RDD`

| another :class:`RDD`

| others : :class:`RDD`

| other :class:`RDD`\s

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` containing the keys and cogrouped values

|

| See Also

| --------

| :meth:`RDD.cogroup`

| :meth:`RDD.join`

|

| Examples

| --------

| >>> rdd1 = sc.parallelize([("a", 5), ("b", 6)])

| >>> rdd2 = sc.parallelize([("a", 1), ("b", 4)])

| >>> rdd3 = sc.parallelize([("a", 2)])

| >>> rdd4 = sc.parallelize([("b", 42)])

| >>> [(x, tuple(map(list, y))) for x, y in

| ... sorted(list(rdd1.groupWith(rdd2, rdd3, rdd4).collect()))]

| [('a', ([5], [1], [2], [])), ('b', ([6], [4], [], [42]))]

|

| histogram(self: 'RDD[S]', buckets: Union[int, List[ForwardRef('S')], Tuple[ForwardRef('S'), ...]]) -> Tuple[Sequence[ForwardRef('S')], List[int]]

| Compute a histogram using the provided buckets. The buckets

| are all open to the right except for the last, which is closed.

| For example, [1,10,20,50] means the buckets are [1,10) [10,20) [20,50],

| i.e. 1<=x<10, 10<=x<20 and 20<=x<=50. Given the inputs 1

| and 50, the histogram would be 1,0,1.

|

| If your buckets are evenly spaced (e.g. [0, 10, 20, 30]),

| finding the bucket for each element drops from O(log n) to O(1)

| (where n is the number of buckets).

|

| Buckets must be sorted, not contain any duplicates, and have

| at least two elements.

|

| If `buckets` is a number, it will generate buckets which are

| evenly spaced between the minimum and maximum of the RDD. For

| example, if the min value is 0 and the max is 100, given `buckets`

| as 2, the resulting buckets will be [0,50) [50,100]. `buckets` must

| be at least 1. An exception is raised if the RDD contains infinity.

| If the elements in the RDD do not vary (max == min), a single bucket

| will be used.

|

| .. versionadded:: 1.2.0

|

| Parameters

| ----------

| buckets : int, or list, or tuple

| if `buckets` is a number, it computes a histogram of the data using

| `buckets` number of buckets evenly, otherwise, `buckets` is the provided

| buckets to bin the data.

|

| Returns

| -------

| tuple

| a tuple of buckets and histogram

|

| See Also

| --------

| :meth:`RDD.stats`

|

| Examples

| --------

| >>> rdd = sc.parallelize(range(51))

| >>> rdd.histogram(2)

| ([0, 25, 50], [25, 26])

| >>> rdd.histogram([0, 5, 25, 50])

| ([0, 5, 25, 50], [5, 20, 26])

| >>> rdd.histogram([0, 15, 30, 45, 60]) # evenly spaced buckets

| ([0, 15, 30, 45, 60], [15, 15, 15, 6])

| >>> rdd = sc.parallelize(["ab", "ac", "b", "bd", "ef"])

| >>> rdd.histogram(("a", "b", "c"))

| (('a', 'b', 'c'), [2, 2])

|
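The bucket rules above (half-open buckets except for the closed last one, plus the constant-time shortcut for evenly spaced buckets) can be sketched in plain Python. The helpers `bucket_counts` and `even_bucket_index` are hypothetical, not PySpark functions. This sketch simply skips values outside the bucket range and does not model NaN or infinity handling.

```python
from bisect import bisect_right
from typing import List, Sequence


def bucket_counts(values: Sequence[float], buckets: Sequence[float]) -> List[int]:
    """Count values per bucket: [b0, b1), [b1, b2), ..., [b_{n-1}, b_n]."""
    counts = [0] * (len(buckets) - 1)
    lo, hi = buckets[0], buckets[-1]
    for x in values:
        if x < lo or x > hi:
            continue  # this sketch ignores values outside the bucket range
        if x == hi:
            counts[-1] += 1  # the last bucket is closed on the right
        else:
            # Binary search over the bucket edges: O(log n) per element
            counts[bisect_right(buckets, x) - 1] += 1
    return counts


def even_bucket_index(x: float, lo: float, hi: float, n: int) -> int:
    """O(1) bucket index for n equal-width buckets; assumes lo <= x <= hi."""
    if x == hi:
        return n - 1
    return int((x - lo) / ((hi - lo) / n))


data = list(range(51))
print(bucket_counts(data, [0, 5, 25, 50]))      # [5, 20, 26]
print(bucket_counts([1, 50], [1, 10, 20, 50]))  # [1, 0, 1]

even = [0] * 4
for x in data:
    even[even_bucket_index(x, 0, 60, 4)] += 1
print(even)  # [15, 15, 15, 6]
```

The counts match the docstring examples for `rdd.histogram([0, 5, 25, 50])` and the evenly spaced `[0, 15, 30, 45, 60]` case.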

| intersection(self: 'RDD[T]', other: 'RDD[T]') -> 'RDD[T]'

| Return the intersection of this RDD and another one. The output will

| not contain any duplicate elements, even if the input RDDs did.

|

| .. versionadded:: 1.0.0

|

| Parameters

| ----------

| other : :class:`RDD`

| another :class:`RDD`

|

| Returns

| -------

| :class:`RDD`

| the intersection of this :class:`RDD` and another one

|

| See Also

| --------

| :meth:`pyspark.sql.DataFrame.intersect`

|

| Notes

| -----

| This method performs a shuffle internally.

|

| Examples

| --------

| >>> rdd1 = sc.parallelize([1, 10, 2, 3, 4, 5])

| >>> rdd2 = sc.parallelize([1, 6, 2, 3, 7, 8])

| >>> sorted(rdd1.intersection(rdd2).collect())

| [1, 2, 3]

|

| isCheckpointed(self) -> bool

| Return whether this RDD is checkpointed and materialized, either reliably or locally.

|

| .. versionadded:: 0.7.0

|

| Returns

| -------

| bool

| whether this :class:`RDD` is checkpointed and materialized, either reliably or locally

|

| See Also

| --------

| :meth:`RDD.checkpoint`

| :meth:`RDD.getCheckpointFile`

| :meth:`SparkContext.setCheckpointDir`

| :meth:`SparkContext.getCheckpointDir`

|

| isEmpty(self) -> bool

| Returns true if and only if the RDD contains no elements at all.

|

| .. versionadded:: 1.3.0

|

| Returns

| -------

| bool

| whether the :class:`RDD` is empty

|

| See Also

| --------

| :meth:`RDD.first`

| :meth:`pyspark.sql.DataFrame.isEmpty`

|

| Notes

| -----

| An RDD may be empty even when it has at least 1 partition.

|

| Examples

| --------

| >>> sc.parallelize([]).isEmpty()

| True

| >>> sc.parallelize([1]).isEmpty()

| False

|

| isLocallyCheckpointed(self) -> bool

| Return whether this RDD is marked for local checkpointing.

|

| Exposed for testing.

|

| .. versionadded:: 2.2.0

|

| Returns

| -------

| bool

| whether this :class:`RDD` is marked for local checkpointing

|

| See Also

| --------

| :meth:`RDD.localCheckpoint`

|

| join(self: 'RDD[Tuple[K, V]]', other: 'RDD[Tuple[K, U]]', numPartitions: Optional[int] = None) -> 'RDD[Tuple[K, Tuple[V, U]]]'

| Return an RDD containing all pairs of elements with matching keys in

| `self` and `other`.

|

| Each pair of elements will be returned as a (k, (v1, v2)) tuple, where

| (k, v1) is in `self` and (k, v2) is in `other`.

|

| Performs a hash join across the cluster.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| other : :class:`RDD`

| another :class:`RDD`

| numPartitions : int, optional

| the number of partitions in new :class:`RDD`

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` containing all pairs of elements with matching keys

|

| See Also

| --------

| :meth:`RDD.leftOuterJoin`

| :meth:`RDD.rightOuterJoin`

| :meth:`RDD.fullOuterJoin`

| :meth:`RDD.cogroup`

| :meth:`RDD.groupWith`

| :meth:`pyspark.sql.DataFrame.join`

|

| Examples

| --------

| >>> rdd1 = sc.parallelize([("a", 1), ("b", 4)])

| >>> rdd2 = sc.parallelize([("a", 2), ("a", 3)])

| >>> sorted(rdd1.join(rdd2).collect())

| [('a', (1, 2)), ('a', (1, 3))]

|
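The `(k, (v1, v2))` output of an inner join can be reproduced with a classic single-machine hash join: index one side by key, then probe it with the other. This is only an illustration of the semantics; in Spark the two RDDs are first shuffled so that equal keys meet in the same partition, and PySpark's actual implementation differs in detail. The helper `simulated_hash_join` is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Tuple, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")
W = TypeVar("W")


def simulated_hash_join(
    left: List[Tuple[K, V]], right: List[Tuple[K, W]]
) -> List[Tuple[K, Tuple[V, W]]]:
    # Build phase: index the right-hand values by key
    table: Dict[K, List[W]] = defaultdict(list)
    for k, w in right:
        table[k].append(w)
    # Probe phase: each left record emits one pair per matching right value
    out = []
    for k, v in left:
        for w in table.get(k, []):
            out.append((k, (v, w)))
    return out


print(simulated_hash_join([("a", 1), ("b", 4)], [("a", 2), ("a", 3)]))
# [('a', (1, 2)), ('a', (1, 3))]
```

Keys present on only one side (here `"b"`) produce nothing, which is the difference from the outer joins.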

| keyBy(self: 'RDD[T]', f: Callable[[~T], ~K]) -> 'RDD[Tuple[K, T]]'

| Creates tuples of the elements in this RDD by applying `f`.

|

| .. versionadded:: 0.9.1

|

| Parameters

| ----------

| f : function

| a function to compute the key

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` of (key, element) tuples, where each key is `f(element)`

|

| See Also

| --------

| :meth:`RDD.map`

| :meth:`RDD.keys`

| :meth:`RDD.values`

|

| Examples

| --------

| >>> rdd1 = sc.parallelize(range(0,3)).keyBy(lambda x: x*x)

| >>> rdd2 = sc.parallelize(zip(range(0,5), range(0,5)))

| >>> [(x, list(map(list, y))) for x, y in sorted(rdd1.cogroup(rdd2).collect())]

| [(0, [[0], [0]]), (1, [[1], [1]]), (2, [[], [2]]), (3, [[], [3]]), (4, [[2], [4]])]

|

| keys(self: 'RDD[Tuple[K, V]]') -> 'RDD[K]'

| Return an RDD with the keys of each tuple.

|

| .. versionadded:: 0.7.0

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` only containing the keys

|

| See Also

| --------

| :meth:`RDD.values`

|

| Examples

| --------

| >>> rdd = sc.parallelize([(1, 2), (3, 4)]).keys()

| >>> rdd.collect()

| [1, 3]

|

| leftOuterJoin(self: 'RDD[Tuple[K, V]]', other: 'RDD[Tuple[K, U]]', numPartitions: Optional[int] = None) -> 'RDD[Tuple[K, Tuple[V, Optional[U]]]]'

| Perform a left outer join of `self` and `other`.

|

| For each element (k, v) in `self`, the resulting RDD will either

| contain all pairs (k, (v, w)) for w in `other`, or the pair

| (k, (v, None)) if no elements in `other` have key k.

|

| Hash-partitions the resulting RDD into the given number of partitions.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| other : :class:`RDD`

| another :class:`RDD`

| numPartitions : int, optional

| the number of partitions in new :class:`RDD`

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` containing all pairs of elements with matching keys

|

| See Also

| --------

| :meth:`RDD.join`

| :meth:`RDD.rightOuterJoin`

| :meth:`RDD.fullOuterJoin`

| :meth:`pyspark.sql.DataFrame.join`

|

| Examples

| --------

| >>> rdd1 = sc.parallelize([("a", 1), ("b", 4)])

| >>> rdd2 = sc.parallelize([("a", 2)])

| >>> sorted(rdd1.leftOuterJoin(rdd2).collect())

| [('a', (1, 2)), ('b', (4, None))]

|

| localCheckpoint(self) -> None

| Mark this RDD for local checkpointing using Spark's existing caching layer.

|

| This method is for users who wish to truncate RDD lineages while skipping the expensive

| step of replicating the materialized data in a reliable distributed file system. This is

| useful for RDDs with long lineages that need to be truncated periodically (e.g. GraphX).

|

| Local checkpointing sacrifices fault-tolerance for performance. In particular, checkpointed

| data is written to ephemeral local storage in the executors instead of to a reliable,

| fault-tolerant storage. The effect is that if an executor fails during the computation,

| the checkpointed data may no longer be accessible, causing an irrecoverable job failure.

|

| This is NOT safe to use with dynamic allocation, which removes executors along

| with their cached blocks. If you must use both features, you are advised to set

| `spark.dynamicAllocation.cachedExecutorIdleTimeout` to a high value.

|

| The checkpoint directory set through :meth:`SparkContext.setCheckpointDir` is not used.

|

| .. versionadded:: 2.2.0

|

| See Also

| --------

| :meth:`RDD.checkpoint`

| :meth:`RDD.isLocallyCheckpointed`

|

| Examples

| --------

| >>> rdd = sc.range(5)

| >>> rdd.isLocallyCheckpointed()

| False

|

| >>> rdd.localCheckpoint()

| >>> rdd.isLocallyCheckpointed()

| True

|

| lookup(self: 'RDD[Tuple[K, V]]', key: ~K) -> List[~V]

| Return the list of values in the RDD for key `key`. This operation

| is done efficiently if the RDD has a known partitioner by only

| searching the partition that the key maps to.

|

| .. versionadded:: 1.2.0

|

| Parameters

| ----------

| key : K

| the key to look up

|

| Returns

| -------

| list

| the list of values in the :class:`RDD` for key `key`

|

| Examples

| --------

| >>> l = range(1000)

| >>> rdd = sc.parallelize(zip(l, l), 10)

| >>> rdd.lookup(42) # slow

| [42]

| >>> sorted_rdd = rdd.sortByKey()

| >>> sorted_rdd.lookup(42) # fast

| [42]

| >>> sorted_rdd.lookup(1024)

| []

| >>> rdd2 = sc.parallelize([(('a', 'b'), 'c')]).groupByKey()

| >>> list(rdd2.lookup(('a', 'b'))[0])

| ['c']

|
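Why `lookup` is faster on an RDD with a known partitioner can be illustrated in plain Python: if the partition index of a key is known, only that one partition needs to be searched. The docstring's example gets its partitioner from `sortByKey` (which, as we understand it, range-partitions the data); this sketch uses a hypothetical hash-based `partition_index` with Python's built-in `hash` instead, for brevity. All helper names are hypothetical.

```python
from typing import Hashable, List, Tuple

Part = List[Tuple[Hashable, object]]


def partition_index(key: Hashable, num_partitions: int) -> int:
    # Hypothetical stand-in for a partitioner (Python's hash, not portable_hash)
    return hash(key) % num_partitions


def build_partitions(pairs: List[Tuple[Hashable, object]], n: int) -> List[Part]:
    parts: List[Part] = [[] for _ in range(n)]
    for k, v in pairs:
        parts[partition_index(k, n)].append((k, v))
    return parts


def lookup(parts: List[Part], key: Hashable, partitioned: bool) -> Tuple[List[object], int]:
    """Return (values for key, number of partitions scanned)."""
    if partitioned:
        # Known partitioner: only the partition the key maps to can contain it
        candidates = [parts[partition_index(key, len(parts))]]
    else:
        # Unknown partitioner: every partition must be scanned
        candidates = parts
    values = [v for part in candidates for k, v in part if k == key]
    return values, len(candidates)


parts = build_partitions([(i, i) for i in range(1000)], 10)
print(lookup(parts, 42, partitioned=True))    # ([42], 1)
print(lookup(parts, 42, partitioned=False))   # ([42], 10)
print(lookup(parts, 1024, partitioned=True))  # ([], 1)
```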

| map(self: 'RDD[T]', f: Callable[[~T], ~U], preservesPartitioning: bool = False) -> 'RDD[U]'

| Return a new RDD by applying a function to each element of this RDD.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| f : function

| a function to run on each element of the RDD

| preservesPartitioning : bool, optional, default False

| indicates whether the input function preserves the partitioner,

| which should be False unless this is a pair RDD and the input function does not modify the keys.

|

| Returns

| -------

| :class:`RDD`

| a new :class:`RDD` by applying a function to all elements

|

| See Also

| --------

| :meth:`RDD.flatMap`

| :meth:`RDD.mapPartitions`

| :meth:`RDD.mapPartitionsWithIndex`

| :meth:`RDD.mapPartitionsWithSplit`

|

| Examples

| --------

| >>> rdd = sc.parallelize(["b", "a", "c"])

| >>> sorted(rdd.map(lambda x: (x, 1)).collect())

| [('a', 1), ('b', 1), ('c', 1)]

|

| mapPartitions(self: 'RDD[T]', f: Callable[[Iterable[~T]], Iterable[~U]], preservesPartitioning: bool = False) -> 'RDD[U]'

| Return a new RDD by applying a function to each partition of this RDD.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| f : function

| a function to run on each partition of the RDD

| preservesPartitioning : bool, optional, default False

| indicates whether the input function preserves the partitioner,

| which should be False unless this is a pair RDD and the input function does not modify the keys.

|

| Returns

| -------

| :class:`RDD`

| a new :class:`RDD` by applying a function to each partition

|

| See Also

| --------

| :meth:`RDD.map`

| :meth:`RDD.flatMap`

| :meth:`RDD.mapPartitionsWithIndex`

| :meth:`RDD.mapPartitionsWithSplit`

| :meth:`RDDBarrier.mapPartitions`

|

| Examples

| --------

| >>> rdd = sc.parallelize([1, 2, 3, 4], 2)

| >>> def f(iterator): yield sum(iterator)

| ...

| >>> rdd.mapPartitions(f).collect()

| [3, 7]

|
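The key property of `mapPartitions` is that the function receives an iterator over a whole partition and is called once per partition, not once per element. That is why it is commonly used when per-partition setup (opening a connection, loading a model) is expensive. The pure-Python sketch below models partitions as lists and counts the calls; `simulated_map_partitions` and `sum_partition` are hypothetical names.

```python
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def simulated_map_partitions(
    partitions: List[List[T]], f: Callable[[Iterator[T]], Iterable[U]]
) -> List[U]:
    # f is called once per partition with an iterator over that partition
    out: List[U] = []
    for part in partitions:
        out.extend(f(iter(part)))
    return out


calls: List[int] = []


def sum_partition(iterator: Iterator[int]) -> Iterator[int]:
    calls.append(1)  # record each invocation
    yield sum(iterator)


print(simulated_map_partitions([[1, 2], [3, 4]], sum_partition))  # [3, 7]
print(len(calls))  # 2: once per partition, not once per element
```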

| mapPartitionsWithIndex(self: 'RDD[T]', f: Callable[[int, Iterable[~T]], Iterable[~U]], preservesPartitioning: bool = False) -> 'RDD[U]'

| Return a new RDD by applying a function to each partition of this RDD,

| while tracking the index of the original partition.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| f : function

| a function to run on each partition of the RDD

| preservesPartitioning : bool, optional, default False

| indicates whether the input function preserves the partitioner,

| which should be False unless this is a pair RDD and the input function does not modify the keys.

|

| Returns

| -------

| :class:`RDD`

| a new :class:`RDD` by applying a function to each partition

|

| See Also

| --------

| :meth:`RDD.map`

| :meth:`RDD.flatMap`

| :meth:`RDD.mapPartitions`

| :meth:`RDD.mapPartitionsWithSplit`

| :meth:`RDDBarrier.mapPartitionsWithIndex`

|

| Examples

| --------

| >>> rdd = sc.parallelize([1, 2, 3, 4], 4)

| >>> def f(splitIndex, iterator): yield splitIndex

| ...

| >>> rdd.mapPartitionsWithIndex(f).sum()

| 6

|
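`mapPartitionsWithIndex` differs from `mapPartitions` only in that the function also receives the partition's index. The sketch below models partitions as lists (hypothetical helpers `simulated_map_partitions_with_index` and `tag_with_partition`). The first call reproduces the docstring example, where four one-element partitions yield the indices 0 to 3, which sum to 6.

```python
from typing import Callable, Iterable, Iterator, List, Tuple, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def simulated_map_partitions_with_index(
    partitions: List[List[T]], f: Callable[[int, Iterator[T]], Iterable[U]]
) -> List[U]:
    out: List[U] = []
    for index, part in enumerate(partitions):
        # The partition's position is passed as the first argument
        out.extend(f(index, iter(part)))
    return out


def tag_with_partition(index: int, iterator: Iterator[int]) -> Iterator[Tuple[int, int]]:
    # Pair each element with the index of the partition it came from
    for x in iterator:
        yield (index, x)


print(simulated_map_partitions_with_index([[1], [2], [3], [4]], lambda i, it: [i]))  # [0, 1, 2, 3]
print(simulated_map_partitions_with_index([[1, 2], [3, 4]], tag_with_partition))
# [(0, 1), (0, 2), (1, 3), (1, 4)]
```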

| mapPartitionsWithSplit(self: 'RDD[T]', f: Callable[[int, Iterable[~T]], Iterable[~U]], preservesPartitioning: bool = False) -> 'RDD[U]'

| Return a new RDD by applying a function to each partition of this RDD,

| while tracking the index of the original partition.

|

| .. versionadded:: 0.7.0

|

| .. deprecated:: 0.9.0

| use :meth:`RDD.mapPartitionsWithIndex` instead.

|

| Parameters

| ----------

| f : function

| a function to run on each partition of the RDD

| preservesPartitioning : bool, optional, default False

| indicates whether the input function preserves the partitioner,

| which should be False unless this is a pair RDD and the input function does not modify the keys.

|

| Returns

| -------

| :class:`RDD`

| a new :class:`RDD` by applying a function to each partition

|

| See Also

| --------

| :meth:`RDD.map`

| :meth:`RDD.flatMap`

| :meth:`RDD.mapPartitions`

| :meth:`RDD.mapPartitionsWithIndex`

|

| Examples

| --------

| >>> rdd = sc.parallelize([1, 2, 3, 4], 4)

| >>> def f(splitIndex, iterator): yield splitIndex

| ...

| >>> rdd.mapPartitionsWithSplit(f).sum()

| 6

|

| mapValues(self: 'RDD[Tuple[K, V]]', f: Callable[[~V], ~U]) -> 'RDD[Tuple[K, U]]'

| Pass each value in the key-value pair RDD through a map function

| without changing the keys; this also retains the original RDD's

| partitioning.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| f : function

| a function to turn a V into a U

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` containing the keys and the mapped values

|

| See Also

| --------

| :meth:`RDD.map`

| :meth:`RDD.flatMapValues`

|

| Examples

| --------

| >>> rdd = sc.parallelize([("a", ["apple", "banana", "lemon"]), ("b", ["grapes"])])

| >>> def f(x): return len(x)

| ...

| >>> rdd.mapValues(f).collect()

| [('a', 3), ('b', 1)]

|

| max(self: 'RDD[T]', key: Optional[Callable[[~T], ForwardRef('S')]] = None) -> ~T

| Find the maximum item in this RDD.

|

| .. versionadded:: 1.0.0

|

| Parameters

| ----------

| key : function, optional

| a function used to generate the key for comparison

|

| Returns

| -------

| T

| the maximum item

|

| See Also

| --------

| :meth:`RDD.min`

|

| Examples

| --------

| >>> rdd = sc.parallelize([1.0, 5.0, 43.0, 10.0])

| >>> rdd.max()

| 43.0

| >>> rdd.max(key=str)

| 5.0

|

| mean(self: 'RDD[NumberOrArray]') -> float

| Compute the mean of this RDD's elements.

|

| .. versionadded:: 0.9.1

|

| Returns

| -------

| float

| the mean of all elements

|

| See Also

| --------

| :meth:`RDD.stats`

| :meth:`RDD.sum`

| :meth:`RDD.meanApprox`

|

| Examples

| --------

| >>> sc.parallelize([1, 2, 3]).mean()

| 2.0

|

| meanApprox(self: 'RDD[Union[float, int]]', timeout: int, confidence: float = 0.95) -> pyspark.rdd.BoundedFloat

| Approximate operation that returns the mean within a timeout,

| with error bounds at the requested confidence level.

|

| .. versionadded:: 1.2.0

|

| Parameters

| ----------

| timeout : int

| maximum time to wait for the job, in milliseconds

| confidence : float

| the desired statistical confidence in the result

|

| Returns

| -------

| :class:`BoundedFloat`

| a potentially incomplete result, with error bounds

|

| See Also

| --------

| :meth:`RDD.mean`

|

| Examples

| --------

| >>> rdd = sc.parallelize(range(1000), 10)

| >>> r = sum(range(1000)) / 1000.0

| >>> abs(rdd.meanApprox(1000) - r) / r < 0.05

| True

|

| min(self: 'RDD[T]', key: Optional[Callable[[~T], ForwardRef('S')]] = None) -> ~T

| Find the minimum item in this RDD.

|

| .. versionadded:: 1.0.0

|

| Parameters

| ----------

| key : function, optional

| a function used to generate the key for comparison

|

| Returns

| -------

| T

| the minimum item

|

| See Also

| --------

| :meth:`RDD.max`

|

| Examples

| --------

| >>> rdd = sc.parallelize([2.0, 5.0, 43.0, 10.0])

| >>> rdd.min()

| 2.0

| >>> rdd.min(key=str)

| 10.0

|
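The `key=str` results in the `max` and `min` examples above can look surprising. Python's built-in `max`, `min` and `sorted` accept the same kind of `key` argument, so the orderings can be checked without Spark: with `key=str` the floats are compared as strings, character by character, rather than numerically.

```python
values_max = [1.0, 5.0, 43.0, 10.0]
values_min = [2.0, 5.0, 43.0, 10.0]

# String order compares the first differing character: '1' < '4' < '5'
print(sorted(values_max, key=str))  # [1.0, 10.0, 43.0, 5.0]
print(max(values_max, key=str))     # 5.0, because "5.0" > "43.0" ('5' > '4')
print(min(values_min, key=str))     # 10.0, because "10.0" < "2.0" ('1' < '2')
```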

| name(self) -> Optional[str]

| Return the name of this RDD.

|

| .. versionadded:: 1.0.0

|

| Returns

| -------

| str

| :class:`RDD` name

|

| See Also

| --------

| :meth:`RDD.setName`

|

| Examples

| --------

| >>> rdd = sc.range(5)

| >>> rdd.name() is None

| True

|

| partitionBy(self: 'RDD[Tuple[K, V]]', numPartitions: Optional[int], partitionFunc: Callable[[~K], int] = portable_hash) -> 'RDD[Tuple[K, V]]'

| Return a copy of the RDD partitioned using the specified partitioner.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| numPartitions : int, optional

| the number of partitions in new :class:`RDD`

| partitionFunc : function, optional, default `portable_hash`

| function to compute the partition index

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` partitioned using the specified partitioner

|

| See Also

| --------

| :meth:`RDD.repartition`

| :meth:`RDD.repartitionAndSortWithinPartitions`

|

| Examples

| --------

| >>> pairs = sc.parallelize([1, 2, 3, 4, 2, 4, 1]).map(lambda x: (x, x))

| >>> sets = pairs.partitionBy(2).glom().collect()

| >>> len(set(sets[0]).intersection(set(sets[1])))

| 0

|
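The `partitionBy` example checks that no key ends up in both partitions. The sketch below shows why, assuming each record's partition index is `partitionFunc(key) % numPartitions`: every record with a given key gets the same index. PySpark's default `partitionFunc` is `portable_hash`; this sketch substitutes Python's built-in `hash` as a stand-in, and `simulated_partition_by` is a hypothetical helper.

```python
from typing import Callable, Hashable, List, Tuple

Pair = Tuple[Hashable, object]


def simulated_partition_by(
    pairs: List[Pair],
    num_partitions: int,
    partition_func: Callable[[Hashable], int] = hash,
) -> List[List[Pair]]:
    parts: List[List[Pair]] = [[] for _ in range(num_partitions)]
    for k, v in pairs:
        # Every record with the same key gets the same partition index
        parts[partition_func(k) % num_partitions].append((k, v))
    return parts


pairs = [(x, x) for x in [1, 2, 3, 4, 2, 4, 1]]
p0, p1 = simulated_partition_by(pairs, 2)
print(p0)  # [(2, 2), (4, 4), (2, 2), (4, 4)]
print(p1)  # [(1, 1), (3, 3), (1, 1)]
print(len(set(p0) & set(p1)))  # 0: no key appears in both partitions
```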

| persist(self: 'RDD[T]', storageLevel: pyspark.storagelevel.StorageLevel = StorageLevel(False, True, False, False, 1)) -> 'RDD[T]'

| Set this RDD's storage level to persist its values across operations

| after the first time it is computed. This can only be used to assign

| a new storage level if the RDD does not have a storage level set yet.

| If no storage level is specified, it defaults to `MEMORY_ONLY`.

|

| .. versionadded:: 0.9.1

|

| Parameters

| ----------

| storageLevel : :class:`StorageLevel`, default `MEMORY_ONLY`

| the target storage level

|

| Returns

| -------

| :class:`RDD`

| The same :class:`RDD` with storage level set to `storageLevel`.

|

| See Also

| --------

| :meth:`RDD.cache`

| :meth:`RDD.unpersist`

| :meth:`RDD.getStorageLevel`

|

| Examples

| --------

| >>> rdd = sc.parallelize(["b", "a", "c"])

| >>> rdd.persist().is_cached

| True

| >>> str(rdd.getStorageLevel())

| 'Memory Serialized 1x Replicated'

| >>> _ = rdd.unpersist()

| >>> rdd.is_cached

| False

|

| >>> from pyspark import StorageLevel

| >>> rdd2 = sc.range(5)

| >>> _ = rdd2.persist(StorageLevel.MEMORY_AND_DISK)

| >>> rdd2.is_cached

| True

| >>> str(rdd2.getStorageLevel())

| 'Disk Memory Serialized 1x Replicated'

|

| An existing storage level cannot be overridden:

|

| >>> _ = rdd2.persist(StorageLevel.MEMORY_ONLY_2)

| Traceback (most recent call last):

| ...

| py4j.protocol.Py4JJavaError: ...

|

| Another storage level can be assigned after `unpersist`:

|

| >>> _ = rdd2.unpersist()

| >>> rdd2.is_cached

| False

| >>> _ = rdd2.persist(StorageLevel.MEMORY_ONLY_2)

| >>> str(rdd2.getStorageLevel())

| 'Memory Serialized 2x Replicated'

| >>> rdd2.is_cached

| True

| >>> _ = rdd2.unpersist()

|

| pipe(self, command: str, env: Optional[Dict[str, str]] = None, checkCode: bool = False) -> 'RDD[str]'

| Return an RDD created by piping elements to a forked external process.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| command : str

| command to run.

| env : dict, optional

| environment variables to set.

| checkCode : bool, optional

| whether to check the return value of the shell command.

|

| Returns

| -------

| :class:`RDD`

| a new :class:`RDD` of strings

|

| Examples

| --------

| >>> sc.parallelize(['1', '2', '', '3']).pipe('cat').collect()

| ['1', '2', '', '3']

|

| randomSplit(self: 'RDD[T]', weights: Sequence[Union[int, float]], seed: Optional[int] = None) -> 'List[RDD[T]]'

| Randomly splits this RDD with the provided weights.

|

| .. versionadded:: 1.3.0

|

| Parameters

| ----------

| weights : list

| weights for the splits; they are normalized if they do not sum to 1

| seed : int, optional

| random seed

|

| Returns

| -------

| list

| split :class:`RDD`\s in a list

|

| See Also

| --------

| :meth:`pyspark.sql.DataFrame.randomSplit`

|

| Examples

| --------

| >>> rdd = sc.parallelize(range(500), 1)

| >>> rdd1, rdd2 = rdd.randomSplit([2, 3], 17)

| >>> len(rdd1.collect() + rdd2.collect())

| 500

| >>> 150 < rdd1.count() < 250

| True

| >>> 250 < rdd2.count() < 350

| True

|
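The weight normalization described above can be illustrated with a small sketch: the weights are scaled to sum to 1 and turned into cumulative bounds, and each element lands in the split whose interval contains its random draw, so the splits are disjoint and together cover the input. This is not PySpark's sampler; it makes one draw per element with Python's `random.Random`, and `simulated_random_split` is a hypothetical helper.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def simulated_random_split(data: Sequence[T], weights: Sequence[float], seed: int) -> List[List[T]]:
    total = float(sum(weights))
    # Normalize the weights into cumulative bounds, e.g. [2, 3] -> [0.0, 0.4, 1.0]
    bounds = [0.0]
    for w in weights:
        bounds.append(bounds[-1] + w / total)
    bounds[-1] = 1.0  # guard against floating-point drift
    rng = random.Random(seed)
    splits: List[List[T]] = [[] for _ in weights]
    for x in data:
        u = rng.random()  # one uniform draw in [0, 1) per element
        for i in range(len(weights)):
            if bounds[i] <= u < bounds[i + 1]:
                splits[i].append(x)
                break
    return splits


a, b = simulated_random_split(range(500), [2, 3], seed=17)
print(len(a) + len(b))  # 500: every element lands in exactly one split
```

With weights `[2, 3]`, roughly 40% of the elements are expected in the first split and 60% in the second, which is what the docstring's range checks on `count()` test.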

| reduce(self: 'RDD[T]', f: Callable[[~T, ~T], ~T]) -> ~T

| Reduces the elements of this RDD using the specified commutative and

| associative binary operator. Currently reduces partitions locally.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| f : function

| the reduce function

|

| Returns

| -------

| T

| the aggregated result

|

| See Also

| --------

| :meth:`RDD.treeReduce`

| :meth:`RDD.aggregate`

| :meth:`RDD.treeAggregate`

|

| Examples

| --------

| >>> from operator import add

| >>> sc.parallelize([1, 2, 3, 4, 5]).reduce(add)

| 15

| >>> sc.parallelize((2 for _ in range(10))).map(lambda x: 1).cache().reduce(add)

| 10

| >>> sc.parallelize([]).reduce(add)

| Traceback (most recent call last):

| ...

| ValueError: Can not reduce() empty RDD

|
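The operator must be commutative and associative because of the two-level evaluation the docstring mentions. Each partition is reduced locally, and then the partial results are combined. The sketch below models partitions as a list of Python lists. `reduce_partitioned` is a hypothetical helper, not PySpark code. Empty partitions are skipped, and an input with no elements raises the same kind of error as the example above.

```python
from functools import reduce
from operator import add, sub
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")


def reduce_partitioned(partitions: Iterable[Iterable[T]],
                       f: Callable[[T, T], T]) -> T:
    """Two-level reduce: within each partition first, then across partials."""
    partials: List[T] = []
    for partition in partitions:
        it = iter(partition)
        try:
            first = next(it)
        except StopIteration:
            continue  # empty partitions contribute nothing
        partials.append(reduce(f, it, first))
    if not partials:
        raise ValueError("Can not reduce() empty RDD")
    return reduce(f, partials)


print(reduce_partitioned([[1, 2], [3], [], [4, 5]], add))  # 15
# Subtraction is neither associative nor commutative, so grouping matters
print(reduce_partitioned([[1, 2, 3, 4]], sub))    # -8
print(reduce_partitioned([[1, 2], [3, 4]], sub))  # 0
```

With subtraction, regrouping the same four numbers into two partitions changes the result from -8 to 0. This is why a non-associative function gives answers that depend on how the data happens to be partitioned.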

| reduceByKey(self: 'RDD[Tuple[K, V]]', func: Callable[[~V, ~V], ~V], numPartitions: Optional[int] = None, partitionFunc: Callable[[~K], int] = portable_hash) -> 'RDD[Tuple[K, V]]'

| Merge the values for each key using an associative and commutative reduce function.

|

| This will also perform the merging locally on each mapper before

| sending results to a reducer, similarly to a "combiner" in MapReduce.

|

| Output will be partitioned with `numPartitions` partitions, or

| the default parallelism level if `numPartitions` is not specified.

| Default partitioner is hash-partition.

|

| .. versionadded:: 1.6.0

|

| Parameters

| ----------

| func : function

| the reduce function

| numPartitions : int, optional

| the number of partitions in new :class:`RDD`

| partitionFunc : function, optional, default `portable_hash`

| function to compute the partition index

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` containing the keys and the aggregated result for each key

|

| See Also

| --------

| :meth:`RDD.reduceByKeyLocally`

| :meth:`RDD.combineByKey`

| :meth:`RDD.aggregateByKey`

| :meth:`RDD.foldByKey`

| :meth:`RDD.groupByKey`

|

| Examples

| --------

| >>> from operator import add

| >>> rdd = sc.parallelize([("a", 1), ("b", 1), ("a", 1)])

| >>> sorted(rdd.reduceByKey(add).collect())

| [('a', 2), ('b', 1)]

|
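The two phases described above can be simulated locally. The first merges values on each mapper. The second hash-partitions the keys for the reducers. In this sketch, `reduce_by_key` and `merge_into` are hypothetical helpers, and partitions are plain lists of `(key, value)` tuples. The built-in `hash` stands in for `portable_hash`. Python randomizes `str` hashes per process (see `PYTHONHASHSEED`), so a cluster needs hashing that agrees across worker processes. Inside a single process, the built-in `hash` is consistent.

```python
from operator import add
from typing import Callable, Dict, Hashable, List, Sequence, Tuple, TypeVar

V = TypeVar("V")


def merge_into(acc: Dict[Hashable, V], key: Hashable, value: V,
               func: Callable[[V, V], V]) -> None:
    """Combine value with any existing entry for key, or start a new entry."""
    acc[key] = func(acc[key], value) if key in acc else value


def reduce_by_key(partitions: Sequence[Sequence[Tuple[Hashable, V]]],
                  func: Callable[[V, V], V],
                  num_partitions: int = 2) -> List[List[Tuple[Hashable, V]]]:
    # Map side ("combiner"): collapse duplicate keys inside each input partition
    combined = []
    for partition in partitions:
        local: Dict[Hashable, V] = {}
        for key, value in partition:
            merge_into(local, key, value, func)
        combined.append(local)
    # Shuffle and reduce side: hash each key to one output partition, merge there
    outputs: List[Dict[Hashable, V]] = [{} for _ in range(num_partitions)]
    for local in combined:
        for key, value in local.items():
            merge_into(outputs[hash(key) % num_partitions], key, value, func)
    return [list(out.items()) for out in outputs]


result = reduce_by_key([[("a", 1), ("a", 1), ("b", 1)], [("a", 1)]], add)
print(sorted(pair for part in result for pair in part))  # [('a', 3), ('b', 1)]
```

The two `"a"` entries in the first partition are combined before the shuffle step. As a result, at most one value per key per input partition is routed to the reduce side.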

| reduceByKeyLocally(self: 'RDD[Tuple[K, V]]', func: Callable[[~V, ~V], ~V]) -> Dict[~K, ~V]

| Merge the values for each key using an associative and commutative reduce function, but

| return the results immediately to the master as a dictionary.

|

| This will also perform the merging locally on each mapper before

| sending results to a reducer, similarly to a "combiner" in MapReduce.

|

| .. versionadded:: 0.7.0

|

| Parameters

| ----------

| func : function

| the reduce function

|

| Returns

| -------

| dict

| a dict containing the keys and the aggregated result for each key

|

| See Also

| --------

| :meth:`RDD.reduceByKey`

| :meth:`RDD.aggregateByKey`

|

| Examples

| --------

| >>> from operator import add

| >>> rdd = sc.parallelize([("a", 1), ("b", 1), ("a", 1)])

| >>> sorted(rdd.reduceByKeyLocally(add).items())

| [('a', 2), ('b', 1)]

|
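Unlike `reduceByKey`, this method returns the merged result to the driver as a plain `dict` rather than as a new RDD. The result must therefore fit in the driver's memory. The sketch below models that flow with hypothetical helpers, `merge_pairs` and `reduce_by_key_locally`. It builds one small dictionary per partition, then folds those dictionaries into one on the caller's side.

```python
from functools import reduce
from operator import add
from typing import Callable, Dict, Hashable, Iterable, Tuple, TypeVar

V = TypeVar("V")


def merge_pairs(acc: Dict[Hashable, V], pairs: Iterable[Tuple[Hashable, V]],
                func: Callable[[V, V], V]) -> Dict[Hashable, V]:
    """Fold (key, value) pairs into acc, combining values that share a key."""
    for key, value in pairs:
        acc[key] = func(acc[key], value) if key in acc else value
    return acc


def reduce_by_key_locally(partitions: Iterable[Iterable[Tuple[Hashable, V]]],
                          func: Callable[[V, V], V]) -> Dict[Hashable, V]:
    # Step 1 (per partition): one small dictionary for each partition
    partials = [merge_pairs({}, partition, func) for partition in partitions]
    # Step 2 (driver side): fold every partial dictionary into a single dict
    return reduce(lambda acc, part: merge_pairs(acc, part.items(), func),
                  partials, {})


print(reduce_by_key_locally([[("a", 1), ("b", 1)], [("a", 1)]], add))  # {'a': 2, 'b': 1}
```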

| repartition(self: 'RDD[T]', numPartitions: int) -> 'RDD[T]'

| Return a new RDD that has exactly numPartitions partitions.

|

| Can increase or decrease the level of parallelism in this RDD.

| Internally, this uses a shuffle to redistribute data.

| If you are decreasing the number of partitions in this RDD, consider

| using `coalesce`, which can avoid performing a shuffle.

|

| .. versionadded:: 1.0.0

|

| Parameters

| ----------

| numPartitions : int

| the number of partitions in new :class:`RDD`

|

| Returns

| -------

| :class:`RDD`

| a :class:`RDD` with exactly numPartitions partitions

|

| See Also

| --------

| :meth:`RDD.coalesce`

| :meth:`RDD.partitionBy`

| :meth:`RDD.repartitionAndSortWithinPartitions`

|

| Examples

| --------

| >>> rdd = sc.parallelize([1,2,3,4,5,6,7], 4)

| >>> sorted(rdd.glom().collect())

| [[1], [2, 3], [4, 5], [6, 7]]

| >>> len(rdd.repartition(2).glom().collect())

| 2

| >>> len(rdd.repartition(10).glom().collect())

| 10

|
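The difference between `repartition` and `coalesce` described above can be modeled with lists of lists. In this sketch, `repartition_sketch` deals elements out round-robin to mimic a full shuffle in which any element may move. `coalesce_sketch` only concatenates neighboring partitions, so it cannot raise the partition count. Both names are hypothetical, and the exact placement of elements is a simplification, not Spark's algorithm.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def repartition_sketch(partitions: Sequence[Sequence[T]],
                       num_partitions: int) -> List[List[T]]:
    """Shuffle-style: every element may move; dealt out round-robin (simplified)."""
    out: List[List[T]] = [[] for _ in range(num_partitions)]
    position = 0
    for partition in partitions:
        for element in partition:
            out[position % num_partitions].append(element)
            position += 1
    return out


def coalesce_sketch(partitions: Sequence[Sequence[T]],
                    num_partitions: int) -> List[List[T]]:
    """No shuffle: whole partitions are merged into neighbors; count never grows."""
    target = min(num_partitions, len(partitions))
    out: List[List[T]] = [[] for _ in range(target)]
    for index, partition in enumerate(partitions):
        # Map each input partition onto one target partition, preserving order
        out[index * target // len(partitions)].extend(partition)
    return out


parts = [[1], [2, 3], [4, 5], [6, 7]]
print(repartition_sketch(parts, 2))     # [[1, 3, 5, 7], [2, 4, 6]]
print(coalesce_sketch(parts, 2))        # [[1, 2, 3], [4, 5, 6, 7]]
print(len(coalesce_sketch(parts, 10)))  # 4
```

Merging whole partitions keeps individual records from being redistributed across partitions. That redistribution is the shuffle the docstring suggests avoiding when the partition count only needs to go down.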